dataset_no = 5 # Re-run this for datasets 1-5 to see how it performs

cutoff = 40 # 40 is the (hard-coded) cut-off as set out previously

cv_runs = 1 # Testing only: 4, proper runs of the models: 24

glm_iter = 500 # 500 epochs for gradient descent fitting of GLMs is very quick

nn_iter = 500 # 100 for experimentation, 500 appears to give models that have fully converged or are close to convergence.

nn_cv_iter = 100 # Lower for CV to save running time

mdn_iter = 500 # Latest architecture and loss converges fairly quicklyIntroduction

In an upcoming talk I’ll be looking at the use of neural networks in individual claims modelling. I start with a Chain Ladder model and progressively expand it into an deep learning individual claim model while still having sensible results. Additionally, there is a benchmark against a GBM model as well. I use a number of different synthetic data sets for this purpose, the first satisfying Chain Ladder assumptions and the remaining ones breaking these assumptions in different ways.

My talk is supported by 5 freely available Jupyter notebooks for each of the data sets so please refer to these if you want to run the code. These may be downloaded as this zip file

The remainder of this post covers the contents and results of the notebook for data set 5.

The notebook is long but structured into sections as follows:

- Data: Read in SPLICE data (including case estimates) and create additional fields, test and train datasets,

- Chain ladder: Directly calculate Chain Ladder factors as a baseline,

- Chain ladder (GLM) Recreate Chain Ladder results, with a GLM formulation,

- Individual GLM Convert the GLM into a very basic individual claims model,

- Individual GLM with splines Replace the overparametrized one-hot encoding with splines,

- Residual network Consider a ResNet,

- Spline-based individual network: Create a customised neural network architecture with similar behaviour to the individual GLM with splines,

- Spline-based Individual Log-normal Mixture Density Network: Introduce a MDN-based network, with modifications to accommodate a log-normal distribution,

- Rolling origin cross-validation and parameter search,

- Additional data augmentation for training individual claim features,

- Detailed individual models using claim features, including a hyperparameter tuned GBM as a benchmark,

- Review and summary.

!pip show pandas numpy scikit-learn torch matplotlibName: pandas

Version: 1.5.2

Summary: Powerful data structures for data analysis, time series, and statistics

Home-page: https://pandas.pydata.org

Author: The Pandas Development Team

Author-email: pandas-dev@python.org

License: BSD-3-Clause

Location: /Users/jacky/GitRepos/NNs-on-Individual-Claims/.conda/lib/python3.10/site-packages

Requires: numpy, python-dateutil, pytz

Required-by: catboost, category-encoders, chainladder, cmdstanpy, darts, datasets, nfoursid, pmdarima, prophet, pytorch-tabular, seaborn, shap, statsforecast, statsmodels, xarray

---

Name: numpy

Version: 1.23.5

Summary: NumPy is the fundamental package for array computing with Python.

Home-page: https://www.numpy.org

Author: Travis E. Oliphant et al.

Author-email:

License: BSD

Location: /Users/jacky/GitRepos/NNs-on-Individual-Claims/.conda/lib/python3.10/site-packages

Requires:

Required-by: catboost, category-encoders, cmdstanpy, contourpy, darts, datasets, duckdb, lightgbm, matplotlib, nfoursid, numba, pandas, patsy, pmdarima, prophet, pyarrow, pyod, pytorch-lightning, pytorch-tabnet, pytorch-tabular, ray, scikit-learn, scikit-optimize, scipy, seaborn, shap, skorch, sparse, statsforecast, statsmodels, tbats, tensorboard, tensorboardX, torchmetrics, tune-sklearn, xarray, xgboost

---

Name: scikit-learn

Version: 1.2.0

Summary: A set of python modules for machine learning and data mining

Home-page: http://scikit-learn.org

Author:

Author-email:

License: new BSD

Location: /Users/jacky/GitRepos/NNs-on-Individual-Claims/.conda/lib/python3.10/site-packages

Requires: joblib, numpy, scipy, threadpoolctl

Required-by: category-encoders, chainladder, darts, lightgbm, pmdarima, pyod, pytorch-tabnet, pytorch-tabular, scikit-optimize, shap, skorch, tbats, tune-sklearn

---

Name: torch

Version: 1.13.1

Summary: Tensors and Dynamic neural networks in Python with strong GPU acceleration

Home-page: https://pytorch.org/

Author: PyTorch Team

Author-email: packages@pytorch.org

License: BSD-3

Location: /Users/jacky/GitRepos/NNs-on-Individual-Claims/.conda/lib/python3.10/site-packages

Requires: typing-extensions

Required-by: darts, pytorch-lightning, pytorch-tabnet, pytorch-tabular, torchmetrics

---

Name: matplotlib

Version: 3.6.2

Summary: Python plotting package

Home-page: https://matplotlib.org

Author: John D. Hunter, Michael Droettboom

Author-email: matplotlib-users@python.org

License: PSF

Location: /Users/jacky/GitRepos/NNs-on-Individual-Claims/.conda/lib/python3.10/site-packages

Requires: contourpy, cycler, fonttools, kiwisolver, numpy, packaging, pillow, pyparsing, python-dateutil

Required-by: catboost, chainladder, darts, nfoursid, prophet, pyod, pytorch-tabular, seaborn, statsforecastimport pandas as pd

import numpy as np

from matplotlib import pyplot as plt

# from torch.utils.data.sampler import BatchSampler, RandomSampler

# from torch.utils.data import DataLoader

import torch

import torch.nn as nn

from torch.nn import functional as F

from torch.autograd import Variable

from sklearn.compose import ColumnTransformer, TransformedTargetRegressor

from sklearn.preprocessing import OneHotEncoder, StandardScaler, MinMaxScaler, SplineTransformer

from sklearn.pipeline import Pipeline

from sklearn.metrics import mean_squared_error

from sklearn.base import BaseEstimator, RegressorMixin, TransformerMixin

from sklearn.utils.validation import check_X_y, check_array, check_is_fitted

from sklearn.ensemble import HistGradientBoostingRegressor

from sklearn.model_selection import GridSearchCV, RandomizedSearchCV, PredefinedSplit

# from skopt import BayesSearchCV

import mathpd.options.display.float_format = '{:,.2f}'.formatScikit Module

This has been used in past experiments so it is basically my boilerplate code. With minor tweaks.

class TabularNetRegressor(BaseEstimator, RegressorMixin):

def __init__(

self,

module,

criterion=nn.PoissonNLLLoss,

max_iter=100,

max_lr=0.01,

keep_best_model=False,

batch_function=None,

rebatch_every_iter=1,

n_hidden=20,

l1_penalty=0.0, # lambda is a reserved word

l1_applies_params=["linear.weight", "hidden.weight"],

weight_decay=0.0,

batch_norm=False,

interactions=True,

dropout=0.0,

clip_value=None,

n_gaussians=3,

verbose=1,

device="cpu" if torch.backends.mps.is_available() else ("cuda" if torch.cuda.is_available() else "cpu"), # Use GPU if available, leave mps off until more stable

init_extra=None,

**kwargs

):

""" Tabular Neural Network Regressor (for Claims Reserving)

This trains a neural network with specified loss, Log Link and l1 LASSO penalties

using Pytorch. It has early stopping.

Args:

module: pytorch nn.Module. Should have n_input and n_output as parameters and

if l1_penalty, init_weight, or init_bias are used, a final layer

called "linear".

criterion: pytorch loss function. Consider nn.PoissonNLLLoss for log link.

max_iter (int): Maximum number of epochs before training stops.

Previously this used a high value for triangles since the record count is so small.

For larger regression problems, a lower number of iterations may be sufficient.

max_lr (float): Min / Max learning rate - we will use one_cycle_lr

keep_best_model (bool): If true, keep and use the model weights with the best loss rather

than the final weights.

batch_function (None or fn): If not None, used to get a batch from X and y

rebatch_every_iter (int): redo batches every

l1_penalty (float): l1 penalty factor. If not zero, is applied to

the layers in the Module with names matching l1_applies_params.

(we use l1_penalty because lambda is a reserved word in Python

for anonymous functions)

weight_decay (float): weight decay - analogous to l2 penalty factor

Applied to all weights

clip_value (None or float): clip gradient norms at a particular value

n_hidden (int), batch_norm(bool), dropout (float), interactions(bool), n_gaussians(int):

Passed to module. Hidden layer size, batch normalisation, dropout percentages, and interactions flag.

init_extra (coerces to torch.Tensor): set init_bias, passed to module. If none, default to np.log(y.mean()).values.astype(np.float32)

verbose (int): 0 means don't print. 1 means do print.

"""

self.module = module

self.criterion = criterion

self.keep_best_model = keep_best_model

self.l1_penalty = l1_penalty

self.l1_applies_params = l1_applies_params

self.weight_decay = weight_decay

self.max_iter = max_iter

self.n_hidden = n_hidden

self.batch_norm = batch_norm

self.batch_function = batch_function

self.rebatch_every_iter = rebatch_every_iter

self.interactions = interactions

self.dropout = dropout

self.n_gaussians = n_gaussians

self.device = device

self.target_device = torch.device(device)

self.max_lr = max_lr

self.init_extra = init_extra

self.print_loss_every_iter = max(1, int(max_iter / 10))

self.verbose = verbose

self.clip_value = clip_value

self.kwargs = kwargs

def fix_array(self, y):

"Need to be picky about array formats"

if isinstance(y, pd.DataFrame) or isinstance(y, pd.Series):

y = y.values

if y.ndim == 1:

y = y.reshape(-1, 1)

y = y.astype(np.float32)

return y

def setup_module(self, n_input, n_output):

# Training new model

self.module_ = self.module(

n_input=n_input,

n_output=n_output,

n_hidden=self.n_hidden,

batch_norm=self.batch_norm,

dropout=self.dropout,

interactions_trainable=self.interactions,

n_gaussians=self.n_gaussians,

init_bias=self.init_bias_calc,

init_extra=self.init_extra,

**self.kwargs

).to(self.target_device)

def fit(self, X, y):

# The main fit logic is in partial_fit

# We will try a few times if numbers explode because NN's are finicky and we are doing CV

n_input = X.shape[-1]

n_output = 1 if y.ndim == 1 else y.shape[-1]

self.init_bias_calc = np.log(y.mean()).values.astype(np.float32)

self.setup_module(n_input=n_input, n_output=n_output)

# Partial fit means you take an existing model and keep training

# so the logic is basically the same

self.partial_fit(X, y)

return self

def partial_fit(self, X, y):

# Check that X and y have correct shape

X, y = check_X_y(X, y, multi_output=True)

# Convert to Pytorch Tensor

X_tensor = torch.from_numpy(self.fix_array(X)).to(self.target_device)

y_tensor = torch.from_numpy(self.fix_array(y)).to(self.target_device)

# Optimizer - the generically useful AdamW. Other options like SGD

# are also possible.

optimizer = torch.optim.AdamW(

params=self.module_.parameters(),

lr=self.max_lr / 10,

weight_decay=self.weight_decay

)

# Scheduler - one cycle LR

scheduler = torch.optim.lr_scheduler.OneCycleLR(

optimizer,

max_lr=self.max_lr,

steps_per_epoch=1,

epochs=self.max_iter

)

# Loss Function

try:

loss_fn = self.criterion(log_input=False).to(self.target_device) # Pytorch loss function

except TypeError:

loss_fn = self.criterion # Custom loss function

best_loss = float('inf') # set to infinity initially

if self.batch_function is not None:

X_tensor_batch, y_tensor_batch = self.batch_function(X_tensor, y_tensor)

else:

X_tensor_batch, y_tensor_batch = X_tensor, y_tensor

# Training loop

for epoch in range(self.max_iter): # Repeat max_iter times

self.module_.train()

y_pred = self.module_(X_tensor_batch) # Apply current model

loss = loss_fn(y_pred, y_tensor_batch) # What is the loss on it?

if self.l1_penalty > 0.0: # Lasso penalty

loss += self.l1_penalty * sum(

[

w.abs().sum()

for p, w in self.module_.named_parameters()

if p in self.l1_applies_params

]

)

if self.keep_best_model & (loss.item() < best_loss):

best_loss = loss.item()

self.best_model = self.module_.state_dict()

optimizer.zero_grad() # Reset optimizer

loss.backward() # Apply back propagation

# gradient norm clipping

if self.clip_value is not None:

grad_norm = torch.nn.utils.clip_grad_norm_(self.module_.parameters(), self.clip_value)

# check if gradients have been clipped

if (self.verbose >= 2) & (grad_norm > self.clip_value):

print(f'Gradient norms have been clipped in epoch {epoch}, value before clipping: {grad_norm}')

optimizer.step() # Update model parameters

scheduler.step()

if torch.isnan(loss.data).tolist():

raise ValueError('Error: nan loss')

# Every self.print_loss_every_iter steps, print RMSE

if (epoch % self.print_loss_every_iter == 0) and (self.verbose > 0):

self.module_.eval() # Eval mode

self.module_.point_estimates=True # Distributional models - set to point

y_pred_point = self.module_(X_tensor) # Get "real" model estimates

assert(y_pred_point.size() == y_tensor.size())

rmse = torch.sqrt(torch.mean(torch.square(y_pred_point - y_tensor)))

self.module_.train() # back to training

self.module_.point_estimates=False # Distributional models - set to point

print("Train RMSE: ", rmse.data.tolist(), " Train Loss: ", loss.data.tolist())

if (self.batch_function is not None) & (epoch % self.rebatch_every_iter == 0):

print(f"refreshing batch on epoch {epoch}")

X_tensor_batch, y_tensor_batch = self.batch_function(X_tensor, y_tensor)

if self.keep_best_model:

self.module_.load_state_dict(self.best_model)

self.module_.eval()

# Return the regressor

return self

def predict(self, X, point_estimates=True):

# Checks

check_is_fitted(self) # Check is fit had been called

X = check_array(X) # Check input

# Convert to Pytorch Tensor

X_tensor = torch.from_numpy(self.fix_array(X)).to(self.target_device)

self.module_.eval() # Eval (prediction) mode

self.module_.point_estimates = point_estimates

# Apply current model and convert back to numpy

if point_estimates:

y_pred = self.module_(X_tensor).cpu().detach().numpy()

if y_pred.shape[-1] == 1:

return y_pred.ravel()

else:

return y_pred

else:

y_pred = self.module_(X_tensor)

return y_pred

def score(self, X, y):

# Negative RMSE score (higher needs to be better)

y_pred = self.predict(X)

y = self.fix_array(y)

return -np.sqrt(np.mean((y_pred - y)**2))class ColumnKeeper(BaseEstimator, TransformerMixin):

"""Keeps named cols, preserves DataFrame output"""

def __init__(self, cols):

self.cols = cols

def fit(self, X, y):

return self

def transform(self, X):

return X.copy()[self.cols]Data

Convert to a time series with one record per time period (even if multiple or no claims transactions). This follows my earlier gist here but rewritten in pure pandas (shorter, less dependencies, but probably less readable so refer to gist for the concepts).

paid = pd.read_csv(f"https://raw.githubusercontent.com/agi-lab/SPLICE/main/datasets/complexity_{dataset_no}/payment_{dataset_no}.csv")

incurred = pd.read_csv(f"https://raw.githubusercontent.com/agi-lab/SPLICE/main/datasets/complexity_{dataset_no}/incurred_{dataset_no}.csv")

# Recreate some of the columns from paid into the incurred set

incurred.loc[:, "trn_no"] = incurred.groupby("claim_no").cumcount() + 1

incurred.loc[incurred.txn_type.str.contains("P"), "pmt_no"] = incurred.loc[incurred.txn_type.str.contains("P")].groupby("claim_no").cumcount() + 1

incurred["payment_period"] = np.ceil(incurred.txn_time).astype("int")

incurred["payment_size"] = incurred.groupby("claim_no").cumpaid.diff()

incurred["payment_size"] = incurred["payment_size"].fillna(incurred["cumpaid"])

incurred["incurred_incremental"] = incurred.groupby("claim_no").incurred.diff()

incurred["incurred_incremental"] = incurred["incurred_incremental"].fillna(incurred["incurred"])

transactions = incurred.merge(

paid[["claim_no", "occurrence_period", "occurrence_time", "notidel", "setldel"]].drop_duplicates(),

on="claim_no",

)

transactions["noti_period"] = np.ceil(transactions["occurrence_time"] + transactions["notidel"]).astype('int')

transactions["settle_period"] = np.ceil(transactions["occurrence_time"] + transactions["notidel"] + transactions["setldel"]).astype('int')

# Apply cut-off since some of the logic in this notebook assumes an equal set of dimensions

transactions["development_period"] = np.minimum(transactions["payment_period"] - transactions["occurrence_period"], cutoff)

num_dev_periods = cutoff - 1 # (transactions["payment_period"] - transactions["occurrence_period"]).max()

transactions.head()| Unnamed: 0 | claim_no | claim_size | txn_time | txn_delay | txn_type | incurred | OCL | cumpaid | multiplier | ... | payment_period | payment_size | incurred_incremental | occurrence_period | occurrence_time | notidel | setldel | noti_period | settle_period | development_period | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1 | 1 | 232,310.09 | 1.87 | 0.00 | Ma | 31,291.71 | 31,291.71 | 0.00 | 1.00 | ... | 2 | 0.00 | 31,291.71 | 1 | 0.73 | 1.13 | 32.37 | 2 | 35 | 1 |

| 1 | 2 | 1 | 232,310.09 | 7.35 | 5.49 | P | 31,291.71 | 17,575.20 | 13,716.51 | NaN | ... | 8 | 13,716.51 | 0.00 | 1 | 0.73 | 1.13 | 32.37 | 2 | 35 | 7 |

| 2 | 3 | 1 | 232,310.09 | 13.99 | 12.12 | PMi | 32,133.97 | 1,606.70 | 30,527.27 | 0.94 | ... | 14 | 16,810.76 | 842.26 | 1 | 0.73 | 1.13 | 32.37 | 2 | 35 | 13 |

| 3 | 4 | 1 | 232,310.09 | 14.23 | 12.36 | Ma | 133,072.86 | 102,545.59 | 30,527.27 | 7.75 | ... | 15 | 0.00 | 100,938.89 | 1 | 0.73 | 1.13 | 32.37 | 2 | 35 | 14 |

| 4 | 5 | 1 | 232,310.09 | 14.41 | 12.54 | Ma | 314,565.32 | 284,038.05 | 30,527.27 | 2.36 | ... | 15 | 0.00 | 181,492.46 | 1 | 0.73 | 1.13 | 32.37 | 2 | 35 | 14 |

5 rows × 22 columns

# Transactions summarised by claim/dev:

transactions_group = (transactions

.groupby(["claim_no", "development_period"], as_index=False)

.agg({"payment_size": "sum", "incurred_incremental": "sum", "pmt_no": "max", "trn_no": "max"})

.sort_values(by=["claim_no", "development_period"])

)

# This is varied from the original version:

range_payment_delay = pd.DataFrame.from_dict({"development_period": range(0, num_dev_periods + 1)})

# Claims header + development periods

claim_head_expand_dev = (

transactions

.loc[:, ["claim_no", "occurrence_period", "occurrence_time", "noti_period", "notidel", "settle_period"]]

.drop_duplicates()

).merge(

range_payment_delay,

how="cross"

).assign(

payment_period=lambda df: (df.occurrence_period + df.development_period),

is_settled=lambda df: (df.occurrence_period + df.development_period) >= df.settle_period

)

# create the dataset

dat = claim_head_expand_dev.merge(

transactions_group,

how="left",

on=["claim_no", "development_period"],

).fillna(0)

# Only periods after notification

dat = dat.loc[dat.payment_period >= dat.noti_period]

# Clean close to zero values

dat["payment_size"] = np.where(abs(dat.payment_size) < 1e-2, 0.0, dat.payment_size)

# Cumulative payments

dat["payment_size_cumulative"] = dat[["claim_no", "payment_size"]].groupby('claim_no').cumsum()

dat["incurred_cumulative"] = dat[["claim_no", "incurred_incremental"]].groupby('claim_no').cumsum()

dat["outstanding_claims"] = dat["incurred_cumulative"] - dat["payment_size_cumulative"]

dat["outstanding_claims"] = np.where(abs(dat.outstanding_claims) < 1e-2, 0.0, dat.outstanding_claims)

dat["payment_to_prior_period"] = dat["payment_size_cumulative"] - dat["payment_size"]

dat["has_payment_to_prior_period"] = np.where(dat.payment_to_prior_period > 1e-2, 1, 0)

dat["log1_payment_to_prior_period"] = np.log1p(dat.payment_to_prior_period)

dat["incurred_to_prior_period"] = dat["incurred_cumulative"] - dat["incurred_incremental"]

dat["has_incurred_to_prior_period"] = np.where(dat.incurred_to_prior_period > 1e-2, 1, 0)

dat["log1_incurred_to_prior_period"] = np.log1p(dat.incurred_to_prior_period)

dat["outstanding_to_prior_period"] = dat["incurred_to_prior_period"] - dat["payment_to_prior_period"]

dat["outstanding_to_prior_period"] = np.where(abs(dat.outstanding_to_prior_period) < 1e-2, 0.0, dat.outstanding_to_prior_period)

dat["log1_outstanding_to_prior_period"] = np.log1p(dat.outstanding_to_prior_period)

dat["has_outstanding_to_prior_period"] = np.where(dat.outstanding_to_prior_period > 1e-2, 1, 0)

dat["pmt_no"] = dat.groupby("claim_no")["pmt_no"].cummax()

dat["trn_no"] = dat.groupby("claim_no")["trn_no"].cummax()

dat["payment_count_to_prior_period"] = dat.groupby("claim_no")["pmt_no"].shift(1).fillna(0)

dat["transaction_count_to_prior_period"] = dat.groupby("claim_no")["trn_no"].shift(1).fillna(0)

dat["data_as_at_development_period"] = dat.development_period # See data augmentation section

dat["backdate_periods"] = 0

dat["payment_period_as_at"] = dat.payment_period

# Potential features for model later:

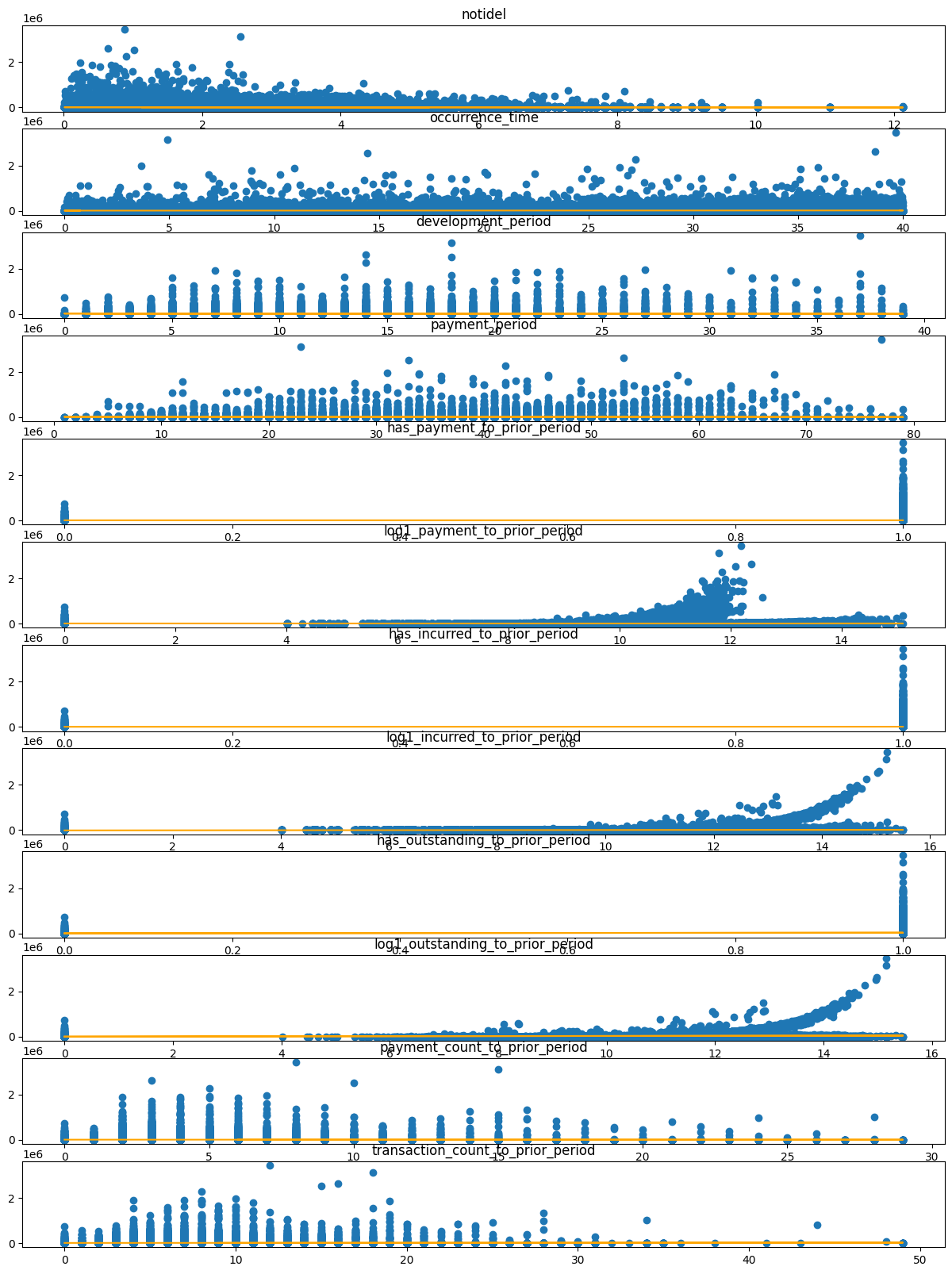

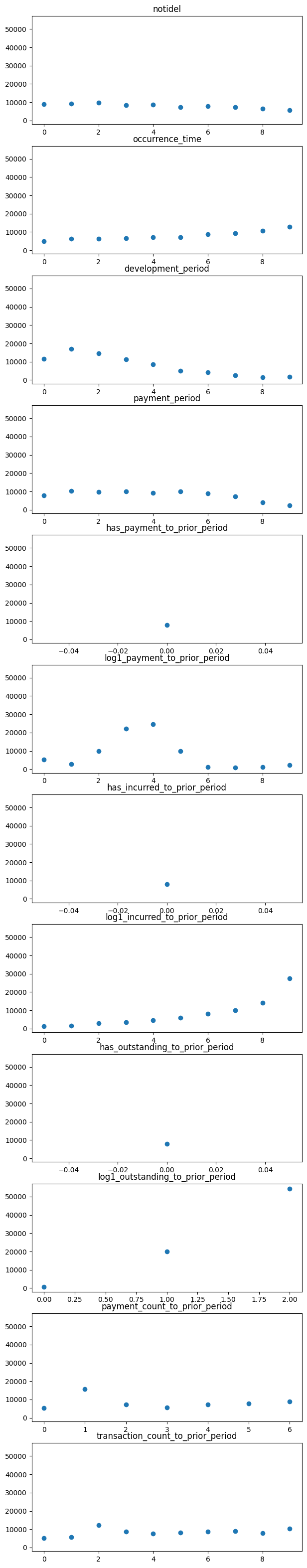

data_cols = [

"notidel",

"occurrence_time",

"development_period",

"payment_period",

"has_payment_to_prior_period",

"log1_payment_to_prior_period",

"has_incurred_to_prior_period",

"log1_incurred_to_prior_period",

"has_outstanding_to_prior_period",

"log1_outstanding_to_prior_period",

"payment_count_to_prior_period",

"transaction_count_to_prior_period"]

# The notebook is created on an MacBook Pro M1, which supports GPU mode in float32 only.

dat[dat.select_dtypes(np.float64).columns] = dat.select_dtypes(np.float64).astype(np.float32)

dat["train_ind"] = (dat.payment_period <= cutoff)

dat["cv_ind"] = dat.payment_period % 5 # Cross validate on this column

dat| claim_no | occurrence_period | occurrence_time | noti_period | notidel | settle_period | development_period | payment_period | is_settled | payment_size | ... | outstanding_to_prior_period | log1_outstanding_to_prior_period | has_outstanding_to_prior_period | payment_count_to_prior_period | transaction_count_to_prior_period | data_as_at_development_period | backdate_periods | payment_period_as_at | train_ind | cv_ind | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 1 | 1 | 0.73 | 2 | 1.13 | 35 | 1 | 2 | False | 0.00 | ... | 0.00 | 0.00 | 0 | 0.00 | 0.00 | 1 | 0 | 2 | True | 2 |

| 2 | 1 | 1 | 0.73 | 2 | 1.13 | 35 | 2 | 3 | False | 0.00 | ... | 31,291.71 | 10.35 | 1 | 0.00 | 1.00 | 2 | 0 | 3 | True | 3 |

| 3 | 1 | 1 | 0.73 | 2 | 1.13 | 35 | 3 | 4 | False | 0.00 | ... | 31,291.71 | 10.35 | 1 | 0.00 | 1.00 | 3 | 0 | 4 | True | 4 |

| 4 | 1 | 1 | 0.73 | 2 | 1.13 | 35 | 4 | 5 | False | 0.00 | ... | 31,291.71 | 10.35 | 1 | 0.00 | 1.00 | 4 | 0 | 5 | True | 0 |

| 5 | 1 | 1 | 0.73 | 2 | 1.13 | 35 | 5 | 6 | False | 0.00 | ... | 31,291.71 | 10.35 | 1 | 0.00 | 1.00 | 5 | 0 | 6 | True | 1 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 146515 | 3663 | 40 | 39.87 | 46 | 5.27 | 56 | 35 | 75 | True | 0.00 | ... | 0.00 | 0.00 | 0 | 6.00 | 9.00 | 35 | 0 | 75 | False | 0 |

| 146516 | 3663 | 40 | 39.87 | 46 | 5.27 | 56 | 36 | 76 | True | 0.00 | ... | 0.00 | 0.00 | 0 | 6.00 | 9.00 | 36 | 0 | 76 | False | 1 |

| 146517 | 3663 | 40 | 39.87 | 46 | 5.27 | 56 | 37 | 77 | True | 0.00 | ... | 0.00 | 0.00 | 0 | 6.00 | 9.00 | 37 | 0 | 77 | False | 2 |

| 146518 | 3663 | 40 | 39.87 | 46 | 5.27 | 56 | 38 | 78 | True | 0.00 | ... | 0.00 | 0.00 | 0 | 6.00 | 9.00 | 38 | 0 | 78 | False | 3 |

| 146519 | 3663 | 40 | 39.87 | 46 | 5.27 | 56 | 39 | 79 | True | 0.00 | ... | 0.00 | 0.00 | 0 | 6.00 | 9.00 | 39 | 0 | 79 | False | 4 |

138644 rows × 32 columns

dat.head(10)| claim_no | occurrence_period | occurrence_time | noti_period | notidel | settle_period | development_period | payment_period | is_settled | payment_size | ... | outstanding_to_prior_period | log1_outstanding_to_prior_period | has_outstanding_to_prior_period | payment_count_to_prior_period | transaction_count_to_prior_period | data_as_at_development_period | backdate_periods | payment_period_as_at | train_ind | cv_ind | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 1 | 1 | 0.73 | 2 | 1.13 | 35 | 1 | 2 | False | 0.00 | ... | 0.00 | 0.00 | 0 | 0.00 | 0.00 | 1 | 0 | 2 | True | 2 |

| 2 | 1 | 1 | 0.73 | 2 | 1.13 | 35 | 2 | 3 | False | 0.00 | ... | 31,291.71 | 10.35 | 1 | 0.00 | 1.00 | 2 | 0 | 3 | True | 3 |

| 3 | 1 | 1 | 0.73 | 2 | 1.13 | 35 | 3 | 4 | False | 0.00 | ... | 31,291.71 | 10.35 | 1 | 0.00 | 1.00 | 3 | 0 | 4 | True | 4 |

| 4 | 1 | 1 | 0.73 | 2 | 1.13 | 35 | 4 | 5 | False | 0.00 | ... | 31,291.71 | 10.35 | 1 | 0.00 | 1.00 | 4 | 0 | 5 | True | 0 |

| 5 | 1 | 1 | 0.73 | 2 | 1.13 | 35 | 5 | 6 | False | 0.00 | ... | 31,291.71 | 10.35 | 1 | 0.00 | 1.00 | 5 | 0 | 6 | True | 1 |

| 6 | 1 | 1 | 0.73 | 2 | 1.13 | 35 | 6 | 7 | False | 0.00 | ... | 31,291.71 | 10.35 | 1 | 0.00 | 1.00 | 6 | 0 | 7 | True | 2 |

| 7 | 1 | 1 | 0.73 | 2 | 1.13 | 35 | 7 | 8 | False | 13,716.51 | ... | 31,291.71 | 10.35 | 1 | 0.00 | 1.00 | 7 | 0 | 8 | True | 3 |

| 8 | 1 | 1 | 0.73 | 2 | 1.13 | 35 | 8 | 9 | False | 0.00 | ... | 17,575.20 | 9.77 | 1 | 1.00 | 2.00 | 8 | 0 | 9 | True | 4 |

| 9 | 1 | 1 | 0.73 | 2 | 1.13 | 35 | 9 | 10 | False | 0.00 | ... | 17,575.20 | 9.77 | 1 | 1.00 | 2.00 | 9 | 0 | 10 | True | 0 |

| 10 | 1 | 1 | 0.73 | 2 | 1.13 | 35 | 10 | 11 | False | 0.00 | ... | 17,575.20 | 9.77 | 1 | 1.00 | 2.00 | 10 | 0 | 11 | True | 1 |

10 rows × 32 columns

Train test split is set up by payment period - past vs future.

There is a caveat for an unrealistic assumption - there is one record per claim even if it is reported “in the future” past the cutoff.

This affects the model training and it does mean when looking at aggregate prediction data that the model knows what the ultimate count is.

However, normally when we are building individual claim models they are used to predict expected costs of known claims so this is not a huge issue.

Chain Ladder, Direct Calculation, Aggregated Data

Chain ladder calculation, from first principles. Based on this article

triangle = (dat

.groupby(["occurrence_period", "development_period", "payment_period"], as_index=False)

.agg({"payment_size_cumulative": "sum", "payment_size": "sum"})

.sort_values(by=["occurrence_period", "development_period"])

)

triangle_train = triangle.loc[triangle.payment_period <= cutoff]

triangle_test = triangle.loc[triangle.payment_period > cutoff]

triangle_train.pivot(index = "occurrence_period", columns = "development_period", values = "payment_size_cumulative")| development_period | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | ... | 30 | 31 | 32 | 33 | 34 | 35 | 36 | 37 | 38 | 39 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| occurrence_period | |||||||||||||||||||||

| 1 | 0.00 | 31,551.25 | 152,793.12 | 680,415.06 | 1,650,518.75 | 1,932,605.62 | 2,602,592.25 | 3,222,089.50 | 3,775,028.25 | 4,788,738.50 | ... | 15,739,832.00 | 16,082,119.00 | 16,780,246.00 | 17,400,658.00 | 17,422,826.00 | 17,422,826.00 | 17,485,892.00 | 17,485,892.00 | 17,485,892.00 | 17,485,892.00 |

| 2 | 10,286.75 | 49,493.38 | 132,020.67 | 430,670.75 | 1,225,081.75 | 2,044,801.12 | 2,433,025.00 | 2,808,325.75 | 3,586,153.00 | 4,218,801.50 | ... | 14,386,516.00 | 14,880,894.00 | 15,342,595.00 | 15,378,178.00 | 15,378,178.00 | 15,409,743.00 | 15,409,743.00 | 15,433,019.00 | 15,795,103.00 | NaN |

| 3 | 0.00 | 62,389.38 | 640,980.81 | 987,640.00 | 1,536,782.25 | 2,385,404.00 | 3,177,442.25 | 3,698,036.50 | 3,918,063.50 | 4,333,062.00 | ... | 15,336,449.00 | 15,437,039.00 | 15,646,281.00 | 15,867,258.00 | 16,067,564.00 | 16,067,564.00 | 16,071,147.00 | 16,108,434.00 | NaN | NaN |

| 4 | 5,646.32 | 63,203.44 | 245,984.03 | 584,083.62 | 1,030,191.31 | 2,371,411.75 | 3,179,435.75 | 4,964,554.00 | 6,244,163.00 | 6,612,675.00 | ... | 17,058,208.00 | 17,107,564.00 | 17,202,888.00 | 17,256,650.00 | 17,256,650.00 | 17,598,124.00 | 18,155,534.00 | NaN | NaN | NaN |

| 5 | 0.00 | 22,911.55 | 177,779.52 | 431,647.50 | 872,805.94 | 1,619,887.00 | 2,862,273.00 | 3,462,464.75 | 4,956,769.50 | 6,237,362.50 | ... | 19,066,434.00 | 19,121,178.00 | 19,157,450.00 | 19,222,538.00 | 19,222,538.00 | 19,235,524.00 | NaN | NaN | NaN | NaN |

| 6 | 2,259.24 | 62,889.91 | 357,107.84 | 813,191.12 | 1,218,815.75 | 2,030,866.88 | 3,065,646.00 | 4,652,615.50 | 5,097,719.50 | 6,097,211.00 | ... | 21,011,932.00 | 21,511,248.00 | 21,511,248.00 | 21,511,248.00 | 21,611,440.00 | NaN | NaN | NaN | NaN | NaN |

| 7 | 25,200.05 | 212,996.06 | 303,872.44 | 522,915.66 | 1,180,820.88 | 3,314,907.25 | 3,931,794.25 | 4,110,920.00 | 5,112,089.00 | 5,522,927.00 | ... | 17,723,798.00 | 18,120,490.00 | 18,120,490.00 | 18,526,526.00 | NaN | NaN | NaN | NaN | NaN | NaN |

| 8 | 0.00 | 101,324.27 | 482,201.94 | 1,302,672.88 | 1,762,479.38 | 2,816,009.75 | 3,599,199.00 | 4,404,539.50 | 5,549,806.50 | 6,296,549.50 | ... | 27,771,816.00 | 27,771,816.00 | 27,839,500.00 | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 9 | 0.00 | 21,563.11 | 207,902.11 | 1,019,339.94 | 1,467,485.12 | 2,183,192.50 | 2,792,763.75 | 3,155,922.75 | 4,188,714.25 | 5,294,733.50 | ... | 19,726,484.00 | 19,798,286.00 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 10 | 0.00 | 62,438.00 | 642,477.19 | 1,220,443.88 | 1,512,127.75 | 2,125,860.75 | 3,205,997.25 | 5,542,220.00 | 6,233,909.50 | 7,278,581.00 | ... | 23,628,224.00 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 11 | 6,573.56 | 233,421.70 | 858,658.38 | 1,487,226.75 | 2,326,138.75 | 4,001,959.50 | 4,689,719.00 | 5,703,576.50 | 7,421,133.00 | 8,338,611.50 | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 12 | 0.00 | 337,785.12 | 602,649.94 | 947,861.81 | 1,960,324.38 | 2,438,331.25 | 3,263,283.25 | 4,443,729.00 | 4,600,126.00 | 4,950,643.00 | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 13 | 0.00 | 48,432.94 | 257,028.44 | 713,263.12 | 1,489,202.25 | 2,399,702.50 | 3,479,596.75 | 4,570,094.00 | 5,228,066.00 | 8,120,785.50 | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 14 | 18,463.75 | 352,189.09 | 1,015,863.62 | 1,451,495.75 | 2,553,104.25 | 3,266,427.50 | 5,020,334.00 | 6,462,674.00 | 8,515,544.00 | 9,761,134.00 | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 15 | 0.00 | 64,434.53 | 272,821.25 | 689,155.88 | 1,832,749.12 | 3,660,129.75 | 4,680,136.50 | 7,358,847.00 | 9,028,388.00 | 10,283,629.00 | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 16 | 172,118.23 | 276,003.81 | 1,468,529.00 | 2,473,900.50 | 3,320,296.25 | 4,172,269.25 | 6,421,418.50 | 7,663,898.50 | 9,201,556.00 | 9,852,889.00 | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 17 | 0.00 | 115,323.28 | 533,563.88 | 1,628,512.75 | 3,782,490.00 | 5,496,876.00 | 7,221,503.00 | 8,019,986.00 | 8,644,119.00 | 9,252,501.00 | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 18 | 0.00 | 113,693.24 | 534,180.81 | 1,126,199.38 | 2,086,124.12 | 2,636,258.50 | 3,415,784.25 | 4,550,892.50 | 6,384,181.50 | 7,276,585.00 | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 19 | 0.00 | 65,176.80 | 593,353.38 | 1,377,092.75 | 2,679,311.25 | 4,561,606.50 | 5,190,826.50 | 6,896,302.50 | 7,889,414.50 | 10,040,150.00 | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 20 | 3,269.31 | 303,522.59 | 789,422.50 | 1,556,370.50 | 2,431,901.50 | 4,251,400.00 | 6,718,960.50 | 9,005,605.00 | 10,659,464.00 | 12,031,561.00 | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 21 | 0.00 | 99,668.09 | 1,164,366.00 | 1,829,524.38 | 3,262,251.50 | 4,136,417.75 | 5,829,693.00 | 7,624,765.00 | 9,753,255.00 | 11,093,190.00 | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 22 | 0.00 | 73,295.09 | 370,612.00 | 1,025,605.56 | 2,284,124.00 | 3,889,548.75 | 5,347,188.00 | 6,029,389.00 | 7,281,124.50 | 8,422,002.00 | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 23 | 738,959.81 | 944,850.44 | 1,558,499.88 | 2,354,515.50 | 4,080,220.25 | 6,339,416.00 | 7,833,012.00 | 9,289,169.00 | 11,375,192.00 | 12,184,491.00 | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 24 | 32,878.80 | 139,590.50 | 722,637.12 | 2,000,021.75 | 3,699,119.00 | 4,275,938.50 | 5,317,856.00 | 7,233,627.50 | 8,291,626.50 | 8,943,028.00 | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 25 | 0.00 | 67,300.14 | 794,740.44 | 1,203,488.25 | 1,704,533.00 | 2,902,497.25 | 3,623,180.75 | 4,985,555.50 | 6,397,038.50 | 7,434,320.50 | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 26 | 0.00 | 222,579.27 | 724,894.44 | 1,461,998.50 | 2,063,614.62 | 3,727,648.00 | 6,192,158.00 | 7,523,790.50 | 8,246,265.50 | 10,140,250.00 | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 27 | 0.00 | 21,265.95 | 431,409.00 | 1,352,109.50 | 2,414,385.00 | 3,756,162.50 | 4,874,574.00 | 8,397,044.00 | 10,588,447.00 | 11,796,448.00 | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 28 | 0.00 | 281,462.16 | 1,313,574.88 | 2,223,132.25 | 3,606,104.75 | 5,205,782.00 | 8,583,559.00 | 9,386,136.00 | 12,726,494.00 | 14,633,823.00 | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 29 | 109,492.41 | 749,180.50 | 2,196,492.25 | 3,589,296.25 | 5,510,728.00 | 7,582,901.50 | 9,060,910.00 | 12,036,241.00 | 14,089,931.00 | 16,412,483.00 | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 30 | 0.00 | 152,130.56 | 1,171,098.38 | 2,392,989.25 | 4,038,955.50 | 5,796,675.50 | 6,487,593.00 | 7,935,940.00 | 9,448,145.00 | 12,658,112.00 | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 31 | 0.00 | 102,149.25 | 587,694.50 | 1,739,223.50 | 3,054,168.25 | 4,391,554.00 | 6,488,115.50 | 8,532,481.00 | 9,589,075.00 | 13,596,397.00 | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 32 | 0.00 | 137,841.00 | 841,636.31 | 1,810,914.25 | 3,203,533.50 | 4,339,657.00 | 7,193,541.50 | 8,792,350.00 | 11,048,168.00 | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 33 | 0.00 | 134,954.59 | 1,254,893.38 | 2,674,034.25 | 4,403,876.00 | 5,591,899.50 | 6,810,711.50 | 8,387,086.00 | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 34 | 38,893.38 | 652,741.81 | 1,456,564.38 | 2,050,356.00 | 3,044,004.00 | 4,796,483.00 | 7,570,764.00 | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 35 | 0.00 | 167,482.09 | 926,322.88 | 2,872,798.50 | 5,040,902.00 | 6,421,425.50 | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 36 | 21,154.29 | 320,832.66 | 1,568,433.12 | 2,393,324.00 | 4,121,508.50 | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 37 | 83,818.48 | 819,248.56 | 1,881,916.25 | 3,489,761.75 | NaN | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 38 | 28,038.49 | 216,292.89 | 1,041,738.56 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 39 | 0.00 | 237,799.91 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 40 | 0.00 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

40 rows × 40 columns

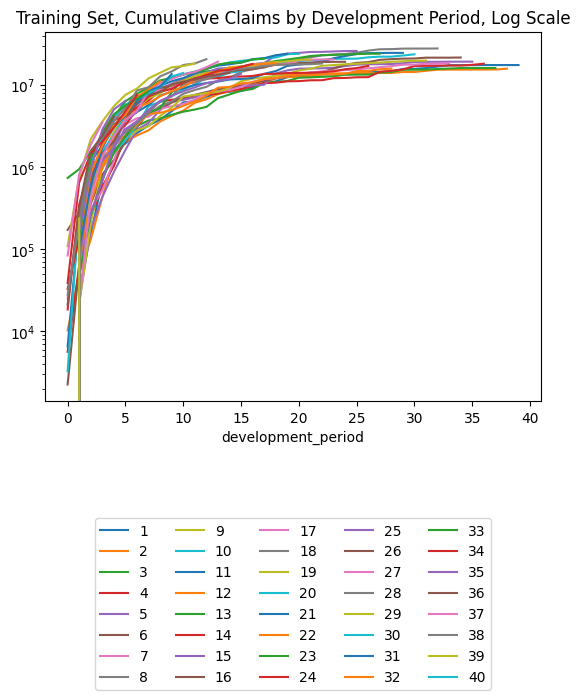

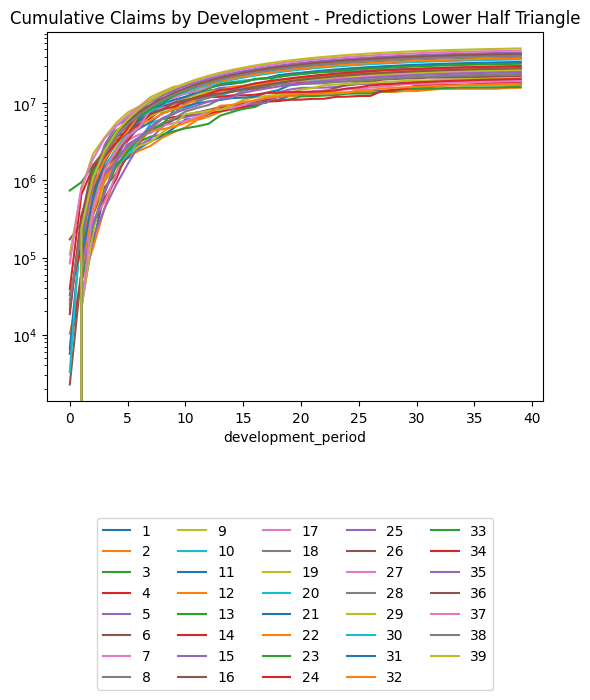

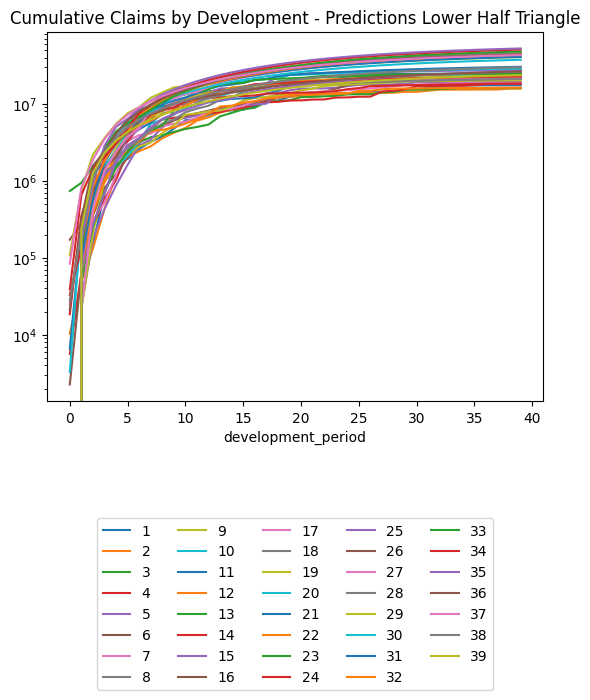

(triangle_train

.pivot(index = "development_period", columns = "occurrence_period", values = "payment_size_cumulative")

.plot(logy=True)

)

plt.legend(loc="lower center", bbox_to_anchor=(0.5, -0.8), ncol=5)

plt.title("Training Set, Cumulative Claims by Development Period, Log Scale")Text(0.5, 1.0, 'Training Set, Cumulative Claims by Development Period, Log Scale')

### Get the diagonals in the cumulative IBNR triangle, which represents cumulative payments for a particular

### occurrence period

triangle_diagonal_interim = triangle['payment_size_cumulative'][triangle['payment_period'] == cutoff].reset_index(drop = True)

triangle_diagonal_interim = pd.DataFrame(triangle_diagonal_interim).rename(columns = {'payment_size_cumulative': 'diagonal'})

triangle_diagonal = triangle_diagonal_interim.iloc[::-1].reset_index(drop = True)

### Sum cumulative payments by development period - to be used to calculate CDFs later

development_period_sum_interim = triangle_train.groupby(by = 'development_period').sum()

development_period_sum = development_period_sum_interim['payment_size_cumulative'].reset_index(drop = True)

development_period_sum = pd.DataFrame(development_period_sum).rename(columns = {'payment_size_cumulative': 'dev_period_sum'})

# Merge two dataframes

df_cdf = pd.concat([triangle_diagonal, development_period_sum], axis = 1)

## dev_period_sum_alt column is to ensure the claims for two consecutive periods have the same number

## of levels/elements (and division of these two claims columns give the CDF)

df_cdf['dev_period_sum_alt'] = df_cdf['dev_period_sum'] - df_cdf['diagonal']

### Calculate Development Factors

df_cdf['dev_period_sum_shift'] = df_cdf['dev_period_sum'].shift(-1)

df_cdf['development_factor'] = df_cdf['dev_period_sum_shift'] / df_cdf['dev_period_sum_alt']

df_cdf['factor_to_ultimate'] = df_cdf.sort_index(ascending=False).fillna(1.0).development_factor.cumprod()

df_cdf['percentage_of_ultimate'] = 1 / df_cdf['factor_to_ultimate']

df_cdf['incr_perc_of_ultimate'] = df_cdf['percentage_of_ultimate'].diff()

df_cdf| diagonal | dev_period_sum | dev_period_sum_alt | dev_period_sum_shift | development_factor | factor_to_ultimate | percentage_of_ultimate | incr_perc_of_ultimate | |

|---|---|---|---|---|---|---|---|---|

| 0 | 0.00 | 1,297,052.88 | 1,297,052.88 | 8,141,409.00 | 6.28 | 923.86 | 0.00 | NaN |

| 1 | 237,799.91 | 8,141,409.00 | 7,903,609.00 | 30,276,714.00 | 3.83 | 147.19 | 0.01 | 0.01 |

| 2 | 1,041,738.56 | 30,276,714.00 | 29,234,976.00 | 57,907,192.00 | 1.98 | 38.42 | 0.03 | 0.02 |

| 3 | 3,489,761.75 | 57,907,192.00 | 54,417,432.00 | 93,450,776.00 | 1.72 | 19.40 | 0.05 | 0.03 |

| 4 | 4,121,508.50 | 93,450,776.00 | 89,329,264.00 | 132,863,912.00 | 1.49 | 11.30 | 0.09 | 0.04 |

| 5 | 6,421,425.50 | 132,863,912.00 | 126,442,488.00 | 172,164,592.00 | 1.36 | 7.59 | 0.13 | 0.04 |

| 6 | 7,570,764.00 | 172,164,592.00 | 164,593,824.00 | 210,850,864.00 | 1.28 | 5.58 | 0.18 | 0.05 |

| 7 | 8,387,086.00 | 210,850,864.00 | 202,463,776.00 | 245,069,168.00 | 1.21 | 4.35 | 0.23 | 0.05 |

| 8 | 11,048,168.00 | 245,069,168.00 | 234,020,992.00 | 273,903,680.00 | 1.17 | 3.60 | 0.28 | 0.05 |

| 9 | 13,596,397.00 | 273,903,680.00 | 260,307,280.00 | 298,338,624.00 | 1.15 | 3.07 | 0.33 | 0.05 |

| 10 | 13,984,095.00 | 298,338,624.00 | 284,354,528.00 | 311,596,960.00 | 1.10 | 2.68 | 0.37 | 0.05 |

| 11 | 18,247,140.00 | 311,596,960.00 | 293,349,824.00 | 324,199,360.00 | 1.11 | 2.45 | 0.41 | 0.04 |

| 12 | 20,606,538.00 | 324,199,360.00 | 303,592,832.00 | 331,724,640.00 | 1.09 | 2.21 | 0.45 | 0.04 |

| 13 | 19,192,766.00 | 331,724,640.00 | 312,531,872.00 | 335,702,112.00 | 1.07 | 2.03 | 0.49 | 0.04 |

| 14 | 14,057,512.00 | 335,702,112.00 | 321,644,608.00 | 340,176,128.00 | 1.06 | 1.89 | 0.53 | 0.04 |

| 15 | 13,656,915.00 | 340,176,128.00 | 326,519,200.00 | 348,353,504.00 | 1.07 | 1.78 | 0.56 | 0.03 |

| 16 | 18,047,780.00 | 348,353,504.00 | 330,305,728.00 | 348,470,784.00 | 1.05 | 1.67 | 0.60 | 0.04 |

| 17 | 20,920,498.00 | 348,470,784.00 | 327,550,272.00 | 350,782,240.00 | 1.07 | 1.58 | 0.63 | 0.03 |

| 18 | 18,409,002.00 | 350,782,240.00 | 332,373,248.00 | 350,120,704.00 | 1.05 | 1.48 | 0.68 | 0.04 |

| 19 | 23,882,838.00 | 350,120,704.00 | 326,237,856.00 | 338,951,584.00 | 1.04 | 1.40 | 0.71 | 0.04 |

| 20 | 23,967,498.00 | 338,951,584.00 | 314,984,096.00 | 322,580,384.00 | 1.02 | 1.35 | 0.74 | 0.03 |

| 21 | 20,525,112.00 | 322,580,384.00 | 302,055,264.00 | 309,189,504.00 | 1.02 | 1.32 | 0.76 | 0.02 |

| 22 | 18,259,918.00 | 309,189,504.00 | 290,929,600.00 | 298,672,864.00 | 1.03 | 1.29 | 0.78 | 0.02 |

| 23 | 20,473,130.00 | 298,672,864.00 | 278,199,744.00 | 285,398,368.00 | 1.03 | 1.26 | 0.80 | 0.02 |

| 24 | 19,147,944.00 | 285,398,368.00 | 266,250,432.00 | 273,799,296.00 | 1.03 | 1.22 | 0.82 | 0.02 |

| 25 | 25,963,162.00 | 273,799,296.00 | 247,836,128.00 | 253,648,528.00 | 1.02 | 1.19 | 0.84 | 0.02 |

| 26 | 17,040,986.00 | 253,648,528.00 | 236,607,536.00 | 243,831,648.00 | 1.03 | 1.16 | 0.86 | 0.02 |

| 27 | 24,036,898.00 | 243,831,648.00 | 219,794,752.00 | 222,414,336.00 | 1.01 | 1.13 | 0.89 | 0.03 |

| 28 | 15,848,811.00 | 222,414,336.00 | 206,565,520.00 | 212,487,168.00 | 1.03 | 1.12 | 0.90 | 0.01 |

| 29 | 24,596,934.00 | 212,487,168.00 | 187,890,240.00 | 191,449,696.00 | 1.02 | 1.08 | 0.92 | 0.03 |

| 30 | 23,628,224.00 | 191,449,696.00 | 167,821,472.00 | 169,830,640.00 | 1.01 | 1.06 | 0.94 | 0.02 |

| 31 | 19,798,286.00 | 169,830,640.00 | 150,032,352.00 | 151,600,704.00 | 1.01 | 1.05 | 0.95 | 0.01 |

| 32 | 27,839,500.00 | 151,600,704.00 | 123,761,200.00 | 125,163,056.00 | 1.01 | 1.04 | 0.96 | 0.01 |

| 33 | 18,526,526.00 | 125,163,056.00 | 106,636,528.00 | 106,959,200.00 | 1.00 | 1.03 | 0.97 | 0.01 |

| 34 | 21,611,440.00 | 106,959,200.00 | 85,347,760.00 | 85,733,776.00 | 1.00 | 1.03 | 0.97 | 0.00 |

| 35 | 19,235,524.00 | 85,733,776.00 | 66,498,252.00 | 67,122,320.00 | 1.01 | 1.02 | 0.98 | 0.00 |

| 36 | 18,155,534.00 | 67,122,320.00 | 48,966,784.00 | 49,027,344.00 | 1.00 | 1.01 | 0.99 | 0.01 |

| 37 | 16,108,434.00 | 49,027,344.00 | 32,918,910.00 | 33,280,996.00 | 1.01 | 1.01 | 0.99 | 0.00 |

| 38 | 15,795,103.00 | 33,280,996.00 | 17,485,892.00 | 17,485,892.00 | 1.00 | 1.00 | 1.00 | 0.01 |

| 39 | 17,485,892.00 | 17,485,892.00 | 0.00 | NaN | NaN | 1.00 | 1.00 | 0.00 |

Apply dev factors with a loop for simplicity. This can also be done with joins but coding it gets complicated.

triangle_cl = triangle.sort_values(["occurrence_period", "development_period"]).copy()

for i in range(0, triangle_cl.shape[0]):

if triangle_cl.loc[i, "payment_period"] > cutoff:

d = triangle_cl.loc[i, "development_period"]

if d <= df_cdf.index.max():

dev_factor = df_cdf.loc[d-1, "development_factor"]

else:

dev_factor = 1

triangle_cl.loc[i, "payment_size_cumulative"] = (

triangle_cl.loc[i - 1, "payment_size_cumulative"] *

dev_factor

)triangle_cl.pivot(index = "occurrence_period", columns = "development_period", values = "payment_size_cumulative")| development_period | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | ... | 30 | 31 | 32 | 33 | 34 | 35 | 36 | 37 | 38 | 39 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| occurrence_period | |||||||||||||||||||||

| 1 | 0.00 | 31,551.25 | 152,793.12 | 680,415.06 | 1,650,518.75 | 1,932,605.62 | 2,602,592.25 | 3,222,089.50 | 3,775,028.25 | 4,788,738.50 | ... | 15,739,832.00 | 16,082,119.00 | 16,780,246.00 | 17,400,658.00 | 17,422,826.00 | 17,422,826.00 | 17,485,892.00 | 17,485,892.00 | 17,485,892.00 | 17,485,892.00 |

| 2 | 10,286.75 | 49,493.38 | 132,020.67 | 430,670.75 | 1,225,081.75 | 2,044,801.12 | 2,433,025.00 | 2,808,325.75 | 3,586,153.00 | 4,218,801.50 | ... | 14,386,516.00 | 14,880,894.00 | 15,342,595.00 | 15,378,178.00 | 15,378,178.00 | 15,409,743.00 | 15,409,743.00 | 15,433,019.00 | 15,795,103.00 | 15,795,103.00 |

| 3 | 0.00 | 62,389.38 | 640,980.81 | 987,640.00 | 1,536,782.25 | 2,385,404.00 | 3,177,442.25 | 3,698,036.50 | 3,918,063.50 | 4,333,062.00 | ... | 15,336,449.00 | 15,437,039.00 | 15,646,281.00 | 15,867,258.00 | 16,067,564.00 | 16,067,564.00 | 16,071,147.00 | 16,108,434.00 | 16,285,616.00 | 16,285,616.00 |

| 4 | 5,646.32 | 63,203.44 | 245,984.03 | 584,083.62 | 1,030,191.31 | 2,371,411.75 | 3,179,435.75 | 4,964,554.00 | 6,244,163.00 | 6,612,675.00 | ... | 17,058,208.00 | 17,107,564.00 | 17,202,888.00 | 17,256,650.00 | 17,256,650.00 | 17,598,124.00 | 18,155,534.00 | 18,177,988.00 | 18,377,934.00 | 18,377,934.00 |

| 5 | 0.00 | 22,911.55 | 177,779.52 | 431,647.50 | 872,805.94 | 1,619,887.00 | 2,862,273.00 | 3,462,464.75 | 4,956,769.50 | 6,237,362.50 | ... | 19,066,434.00 | 19,121,178.00 | 19,157,450.00 | 19,222,538.00 | 19,222,538.00 | 19,235,524.00 | 19,416,044.00 | 19,440,058.00 | 19,653,886.00 | 19,653,886.00 |

| 6 | 2,259.24 | 62,889.91 | 357,107.84 | 813,191.12 | 1,218,815.75 | 2,030,866.88 | 3,065,646.00 | 4,652,615.50 | 5,097,719.50 | 6,097,211.00 | ... | 21,011,932.00 | 21,511,248.00 | 21,511,248.00 | 21,511,248.00 | 21,611,440.00 | 21,709,186.00 | 21,912,922.00 | 21,940,024.00 | 22,181,350.00 | 22,181,350.00 |

| 7 | 25,200.05 | 212,996.06 | 303,872.44 | 522,915.66 | 1,180,820.88 | 3,314,907.25 | 3,931,794.25 | 4,110,920.00 | 5,112,089.00 | 5,522,927.00 | ... | 17,723,798.00 | 18,120,490.00 | 18,120,490.00 | 18,526,526.00 | 18,582,586.00 | 18,666,634.00 | 18,841,816.00 | 18,865,120.00 | 19,072,624.00 | 19,072,624.00 |

| 8 | 0.00 | 101,324.27 | 482,201.94 | 1,302,672.88 | 1,762,479.38 | 2,816,009.75 | 3,599,199.00 | 4,404,539.50 | 5,549,806.50 | 6,296,549.50 | ... | 27,771,816.00 | 27,771,816.00 | 27,839,500.00 | 28,154,842.00 | 28,240,036.00 | 28,367,764.00 | 28,633,988.00 | 28,669,402.00 | 28,984,746.00 | 28,984,746.00 |

| 9 | 0.00 | 21,563.11 | 207,902.11 | 1,019,339.94 | 1,467,485.12 | 2,183,192.50 | 2,792,763.75 | 3,155,922.75 | 4,188,714.25 | 5,294,733.50 | ... | 19,726,484.00 | 19,798,286.00 | 20,005,246.00 | 20,231,848.00 | 20,293,068.00 | 20,384,852.00 | 20,576,158.00 | 20,601,606.00 | 20,828,210.00 | 20,828,210.00 |

| 10 | 0.00 | 62,438.00 | 642,477.19 | 1,220,443.88 | 1,512,127.75 | 2,125,860.75 | 3,205,997.25 | 5,542,220.00 | 6,233,909.50 | 7,278,581.00 | ... | 23,628,224.00 | 23,911,102.00 | 24,161,056.00 | 24,434,732.00 | 24,508,668.00 | 24,619,518.00 | 24,850,566.00 | 24,881,302.00 | 25,154,980.00 | 25,154,980.00 |

| 11 | 6,573.56 | 233,421.70 | 858,658.38 | 1,487,226.75 | 2,326,138.75 | 4,001,959.50 | 4,689,719.00 | 5,703,576.50 | 7,421,133.00 | 8,338,611.50 | ... | 25,062,908.00 | 25,362,962.00 | 25,628,092.00 | 25,918,386.00 | 25,996,812.00 | 26,114,394.00 | 26,359,472.00 | 26,392,074.00 | 26,682,368.00 | 26,682,368.00 |

| 12 | 0.00 | 337,785.12 | 602,649.94 | 947,861.81 | 1,960,324.38 | 2,438,331.25 | 3,263,283.25 | 4,443,729.00 | 4,600,126.00 | 4,950,643.00 | ... | 16,612,005.00 | 16,810,886.00 | 16,986,618.00 | 17,179,028.00 | 17,231,010.00 | 17,308,944.00 | 17,471,384.00 | 17,492,992.00 | 17,685,404.00 | 17,685,404.00 |

| 13 | 0.00 | 48,432.94 | 257,028.44 | 713,263.12 | 1,489,202.25 | 2,399,702.50 | 3,479,596.75 | 4,570,094.00 | 5,228,066.00 | 8,120,785.50 | ... | 25,494,662.00 | 25,799,886.00 | 26,069,584.00 | 26,364,878.00 | 26,444,656.00 | 26,564,264.00 | 26,813,564.00 | 26,846,726.00 | 27,142,022.00 | 27,142,022.00 |

| 14 | 18,463.75 | 352,189.09 | 1,015,863.62 | 1,451,495.75 | 2,553,104.25 | 3,266,427.50 | 5,020,334.00 | 6,462,674.00 | 8,515,544.00 | 9,761,134.00 | ... | 18,626,320.00 | 18,849,316.00 | 19,046,356.00 | 19,262,096.00 | 19,320,380.00 | 19,407,764.00 | 19,589,902.00 | 19,614,130.00 | 19,829,872.00 | 19,829,872.00 |

| 15 | 0.00 | 64,434.53 | 272,821.25 | 689,155.88 | 1,832,749.12 | 3,660,129.75 | 4,680,136.50 | 7,358,847.00 | 9,028,388.00 | 10,283,629.00 | ... | 29,044,080.00 | 29,391,798.00 | 29,699,044.00 | 30,035,450.00 | 30,126,334.00 | 30,262,592.00 | 30,546,598.00 | 30,584,378.00 | 30,920,786.00 | 30,920,786.00 |

| 16 | 172,118.23 | 276,003.81 | 1,468,529.00 | 2,473,900.50 | 3,320,296.25 | 4,172,269.25 | 6,421,418.50 | 7,663,898.50 | 9,201,556.00 | 9,852,889.00 | ... | 22,027,448.00 | 22,291,162.00 | 22,524,182.00 | 22,779,316.00 | 22,848,244.00 | 22,951,584.00 | 23,166,978.00 | 23,195,630.00 | 23,450,766.00 | 23,450,766.00 |

| 17 | 0.00 | 115,323.28 | 533,563.88 | 1,628,512.75 | 3,782,490.00 | 5,496,876.00 | 7,221,503.00 | 8,019,986.00 | 8,644,119.00 | 9,252,501.00 | ... | 24,161,340.00 | 24,450,602.00 | 24,706,196.00 | 24,986,046.00 | 25,061,652.00 | 25,175,004.00 | 25,411,266.00 | 25,442,694.00 | 25,722,546.00 | 25,722,546.00 |

| 18 | 0.00 | 113,693.24 | 534,180.81 | 1,126,199.38 | 2,086,124.12 | 2,636,258.50 | 3,415,784.25 | 4,550,892.50 | 6,384,181.50 | 7,276,585.00 | ... | 22,122,974.00 | 22,387,832.00 | 22,621,862.00 | 22,878,104.00 | 22,947,330.00 | 23,051,118.00 | 23,267,448.00 | 23,296,226.00 | 23,552,468.00 | 23,552,468.00 |

| 19 | 0.00 | 65,176.80 | 593,353.38 | 1,377,092.75 | 2,679,311.25 | 4,561,606.50 | 5,190,826.50 | 6,896,302.50 | 7,889,414.50 | 10,040,150.00 | ... | 25,454,732.00 | 25,759,478.00 | 26,028,754.00 | 26,323,586.00 | 26,403,238.00 | 26,522,658.00 | 26,771,566.00 | 26,804,676.00 | 27,099,510.00 | 27,099,510.00 |

| 20 | 3,269.31 | 303,522.59 | 789,422.50 | 1,556,370.50 | 2,431,901.50 | 4,251,400.00 | 6,718,960.50 | 9,005,605.00 | 10,659,464.00 | 12,031,561.00 | ... | 30,440,728.00 | 30,805,166.00 | 31,127,186.00 | 31,479,768.00 | 31,575,022.00 | 31,717,834.00 | 32,015,498.00 | 32,055,094.00 | 32,407,678.00 | 32,407,678.00 |

| 21 | 0.00 | 99,668.09 | 1,164,366.00 | 1,829,524.38 | 3,262,251.50 | 4,136,417.75 | 5,829,693.00 | 7,624,765.00 | 9,753,255.00 | 11,093,190.00 | ... | 31,515,310.00 | 31,892,614.00 | 32,226,002.00 | 32,591,030.00 | 32,689,646.00 | 32,837,498.00 | 33,145,670.00 | 33,186,664.00 | 33,551,694.00 | 33,551,694.00 |

| 22 | 0.00 | 73,295.09 | 370,612.00 | 1,025,605.56 | 2,284,124.00 | 3,889,548.75 | 5,347,188.00 | 6,029,389.00 | 7,281,124.50 | 8,422,002.00 | ... | 25,589,250.00 | 25,895,606.00 | 26,166,304.00 | 26,462,694.00 | 26,542,768.00 | 26,662,818.00 | 26,913,042.00 | 26,946,328.00 | 27,242,720.00 | 27,242,720.00 |

| 23 | 738,959.81 | 944,850.44 | 1,558,499.88 | 2,354,515.50 | 4,080,220.25 | 6,339,416.00 | 7,833,012.00 | 9,289,169.00 | 11,375,192.00 | 12,184,491.00 | ... | 31,142,896.00 | 31,515,740.00 | 31,845,188.00 | 32,205,904.00 | 32,303,356.00 | 32,449,462.00 | 32,753,992.00 | 32,794,502.00 | 33,155,220.00 | 33,155,220.00 |

| 24 | 32,878.80 | 139,590.50 | 722,637.12 | 2,000,021.75 | 3,699,119.00 | 4,275,938.50 | 5,317,856.00 | 7,233,627.50 | 8,291,626.50 | 8,943,028.00 | ... | 28,343,990.00 | 28,683,326.00 | 28,983,166.00 | 29,311,462.00 | 29,400,156.00 | 29,533,130.00 | 29,810,292.00 | 29,847,162.00 | 30,175,460.00 | 30,175,460.00 |

| 25 | 0.00 | 67,300.14 | 794,740.44 | 1,203,488.25 | 1,704,533.00 | 2,902,497.25 | 3,623,180.75 | 4,985,555.50 | 6,397,038.50 | 7,434,320.50 | ... | 22,882,388.00 | 23,156,338.00 | 23,398,402.00 | 23,663,440.00 | 23,735,042.00 | 23,842,394.00 | 24,066,148.00 | 24,095,912.00 | 24,360,950.00 | 24,360,950.00 |

| 26 | 0.00 | 222,579.27 | 724,894.44 | 1,461,998.50 | 2,063,614.62 | 3,727,648.00 | 6,192,158.00 | 7,523,790.50 | 8,246,265.50 | 10,140,250.00 | ... | 24,910,628.00 | 25,208,860.00 | 25,472,380.00 | 25,760,910.00 | 25,838,860.00 | 25,955,728.00 | 26,199,316.00 | 26,231,720.00 | 26,520,252.00 | 26,520,252.00 |

| 27 | 0.00 | 21,265.95 | 431,409.00 | 1,352,109.50 | 2,414,385.00 | 3,756,162.50 | 4,874,574.00 | 8,397,044.00 | 10,588,447.00 | 11,796,448.00 | ... | 36,532,008.00 | 36,969,372.00 | 37,355,828.00 | 37,778,964.00 | 37,893,280.00 | 38,064,668.00 | 38,421,896.00 | 38,469,416.00 | 38,892,552.00 | 38,892,552.00 |

| 28 | 0.00 | 281,462.16 | 1,313,574.88 | 2,223,132.25 | 3,606,104.75 | 5,205,782.00 | 8,583,559.00 | 9,386,136.00 | 12,726,494.00 | 14,633,823.00 | ... | 42,857,536.00 | 43,370,628.00 | 43,824,000.00 | 44,320,400.00 | 44,454,508.00 | 44,655,572.00 | 45,074,652.00 | 45,130,400.00 | 45,626,804.00 | 45,626,804.00 |

| 29 | 109,492.41 | 749,180.50 | 2,196,492.25 | 3,589,296.25 | 5,510,728.00 | 7,582,901.50 | 9,060,910.00 | 12,036,241.00 | 14,089,931.00 | 16,412,483.00 | ... | 41,941,440.00 | 42,443,564.00 | 42,887,248.00 | 43,373,040.00 | 43,504,284.00 | 43,701,052.00 | 44,111,176.00 | 44,165,732.00 | 44,651,524.00 | 44,651,524.00 |

| 30 | 0.00 | 152,130.56 | 1,171,098.38 | 2,392,989.25 | 4,038,955.50 | 5,796,675.50 | 6,487,593.00 | 7,935,940.00 | 9,448,145.00 | 12,658,112.00 | ... | 35,222,156.00 | 35,643,840.00 | 36,016,440.00 | 36,424,404.00 | 36,534,620.00 | 36,699,864.00 | 37,044,284.00 | 37,090,100.00 | 37,498,064.00 | 37,498,064.00 |

| 31 | 0.00 | 102,149.25 | 587,694.50 | 1,739,223.50 | 3,054,168.25 | 4,391,554.00 | 6,488,115.50 | 8,532,481.00 | 9,589,075.00 | 13,596,397.00 | ... | 39,249,004.00 | 39,718,896.00 | 40,134,096.00 | 40,588,700.00 | 40,711,516.00 | 40,895,652.00 | 41,279,448.00 | 41,330,504.00 | 41,785,112.00 | 41,785,112.00 |

| 32 | 0.00 | 137,841.00 | 841,636.31 | 1,810,914.25 | 3,203,533.50 | 4,339,657.00 | 7,193,541.50 | 8,792,350.00 | 11,048,168.00 | 12,931,036.00 | ... | 37,328,284.00 | 37,775,180.00 | 38,170,060.00 | 38,602,416.00 | 38,719,224.00 | 38,894,348.00 | 39,259,360.00 | 39,307,916.00 | 39,740,276.00 | 39,740,276.00 |

| 33 | 0.00 | 134,954.59 | 1,254,893.38 | 2,674,034.25 | 4,403,876.00 | 5,591,899.50 | 6,810,711.50 | 8,387,086.00 | 10,152,020.00 | 11,882,163.00 | ... | 34,300,484.00 | 34,711,132.00 | 35,073,984.00 | 35,471,272.00 | 35,578,604.00 | 35,739,524.00 | 36,074,932.00 | 36,119,548.00 | 36,516,840.00 | 36,516,840.00 |

| 34 | 38,893.38 | 652,741.81 | 1,456,564.38 | 2,050,356.00 | 3,044,004.00 | 4,796,483.00 | 7,570,764.00 | 9,698,433.00 | 11,739,320.00 | 13,739,976.00 | ... | 39,663,468.00 | 40,138,320.00 | 40,557,904.00 | 41,017,308.00 | 41,141,420.00 | 41,327,500.00 | 41,715,348.00 | 41,766,940.00 | 42,226,348.00 | 42,226,348.00 |

| 35 | 0.00 | 167,482.09 | 926,322.88 | 2,872,798.50 | 5,040,902.00 | 6,421,425.50 | 8,743,438.00 | 11,200,673.00 | 13,557,683.00 | 15,868,231.00 | ... | 45,807,152.00 | 46,355,560.00 | 46,840,136.00 | 47,370,700.00 | 47,514,040.00 | 47,728,944.00 | 48,176,868.00 | 48,236,452.00 | 48,767,020.00 | 48,767,020.00 |

| 36 | 21,154.29 | 320,832.66 | 1,568,433.12 | 2,393,324.00 | 4,121,508.50 | 6,130,127.00 | 8,346,805.50 | 10,692,571.00 | 12,942,659.00 | 15,148,392.00 | ... | 43,729,172.00 | 44,252,700.00 | 44,715,292.00 | 45,221,788.00 | 45,358,624.00 | 45,563,776.00 | 45,991,380.00 | 46,048,260.00 | 46,554,760.00 | 46,554,760.00 |

| 37 | 83,818.48 | 819,248.56 | 1,881,916.25 | 3,489,761.75 | 5,992,949.50 | 8,913,616.00 | 12,136,815.00 | 15,547,716.00 | 18,819,494.00 | 22,026,778.00 | ... | 63,585,152.00 | 64,346,396.00 | 65,019,040.00 | 65,755,520.00 | 65,954,488.00 | 66,252,796.00 | 66,874,564.00 | 66,957,276.00 | 67,693,760.00 | 67,693,760.00 |

| 38 | 28,038.49 | 216,292.89 | 1,041,738.56 | 2,063,424.12 | 3,543,507.50 | 5,270,437.00 | 7,176,248.00 | 9,193,043.00 | 11,127,578.00 | 13,023,979.00 | ... | 37,596,596.00 | 38,046,704.00 | 38,444,424.00 | 38,879,888.00 | 38,997,536.00 | 39,173,920.00 | 39,541,556.00 | 39,590,460.00 | 40,025,928.00 | 40,025,928.00 |

| 39 | 0.00 | 237,799.91 | 910,950.94 | 1,804,366.50 | 3,098,629.25 | 4,608,747.50 | 6,275,289.00 | 8,038,881.00 | 9,730,540.00 | 11,388,853.00 | ... | 32,876,436.00 | 33,270,034.00 | 33,617,820.00 | 33,998,616.00 | 34,101,492.00 | 34,255,732.00 | 34,577,212.00 | 34,619,976.00 | 35,000,772.00 | 35,000,772.00 |

| 40 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | ... | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |

40 rows × 40 columns

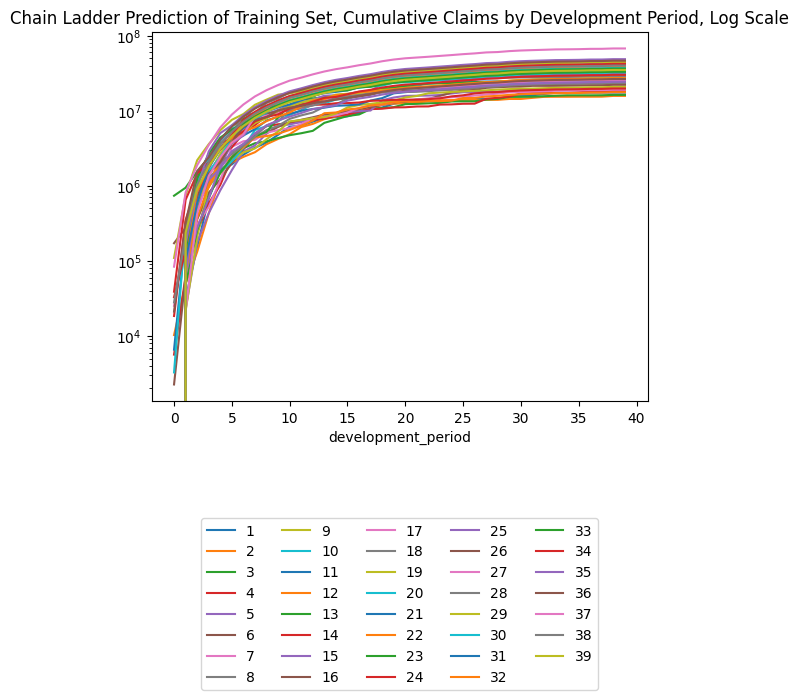

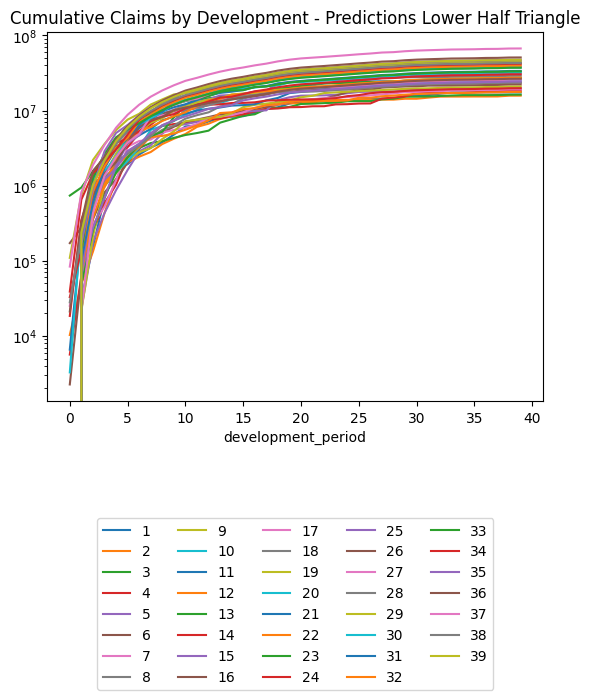

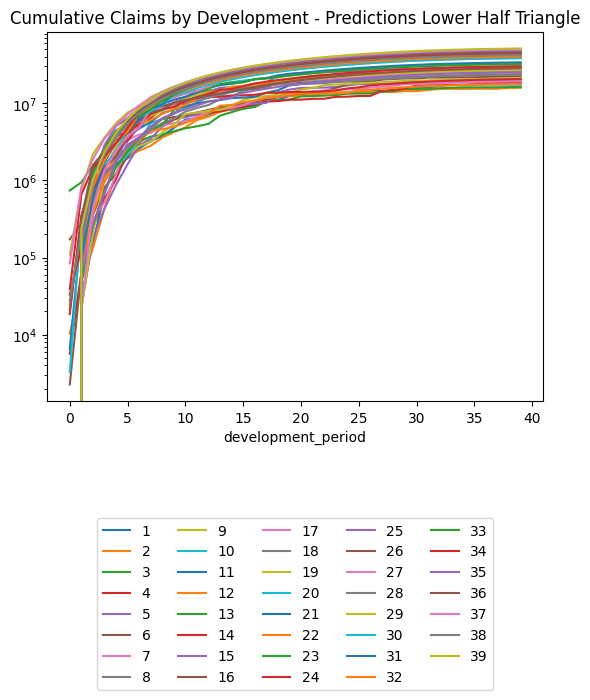

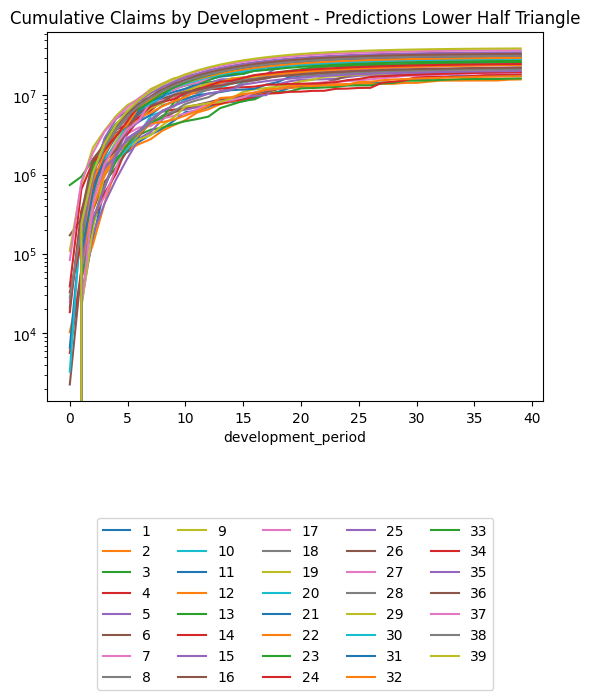

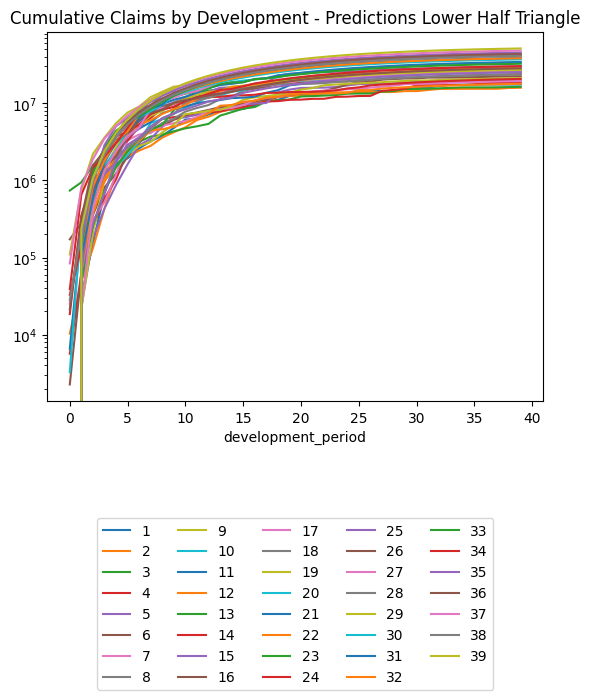

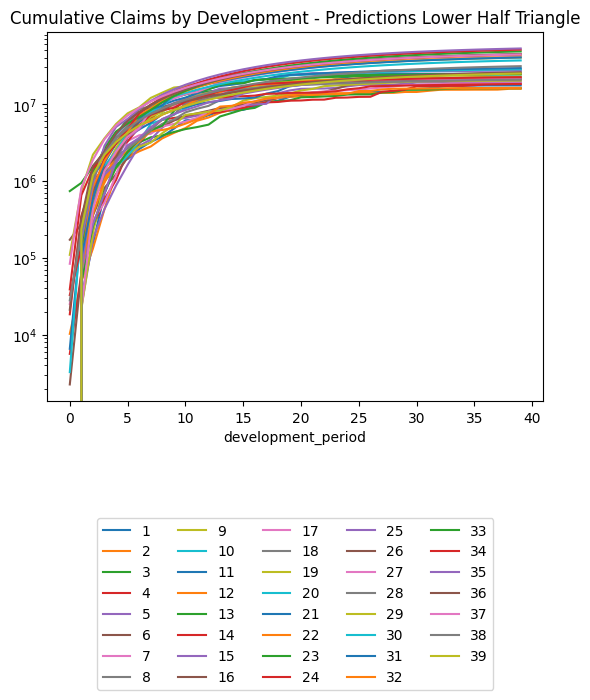

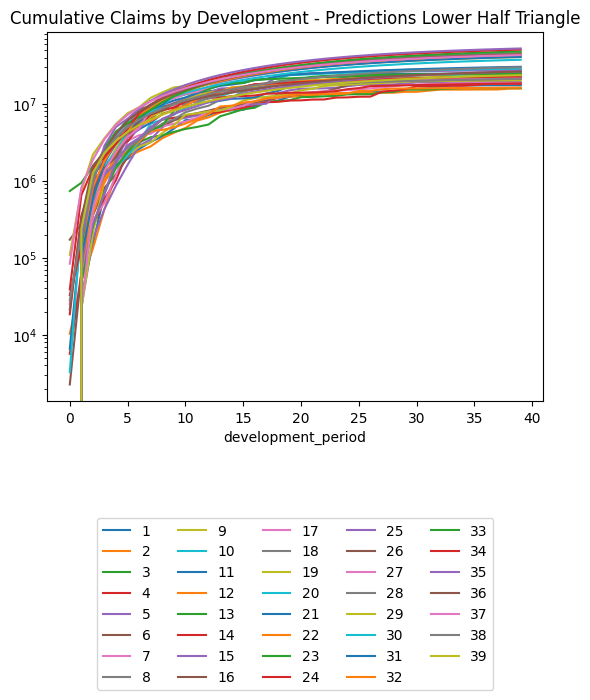

(triangle_cl

.loc[lambda df: df.occurrence_period != cutoff] # Exclude occurrence period == 40 since there's no data there at cutoff

.pivot(index = "development_period", columns = "occurrence_period", values = "payment_size_cumulative")

.plot(logy=True)

)

plt.legend(loc="lower center", bbox_to_anchor=(0.5, -0.8), ncol=5)

plt.title("Chain Ladder Prediction of Training Set, Cumulative Claims by Development Period, Log Scale")Text(0.5, 1.0, 'Chain Ladder Prediction of Training Set, Cumulative Claims by Development Period, Log Scale')

# MSE / 10^12

# Exclude occurrence period == 40 since there's no data there at cutoff

np.sum((

triangle_cl.loc[lambda df: df.occurrence_period != cutoff].payment_size_cumulative.values -

triangle.loc[lambda df: df.occurrence_period != cutoff].payment_size_cumulative.values

)**2)/10**1227656.166297305088Chain Ladder, GLM, Aggregated Data

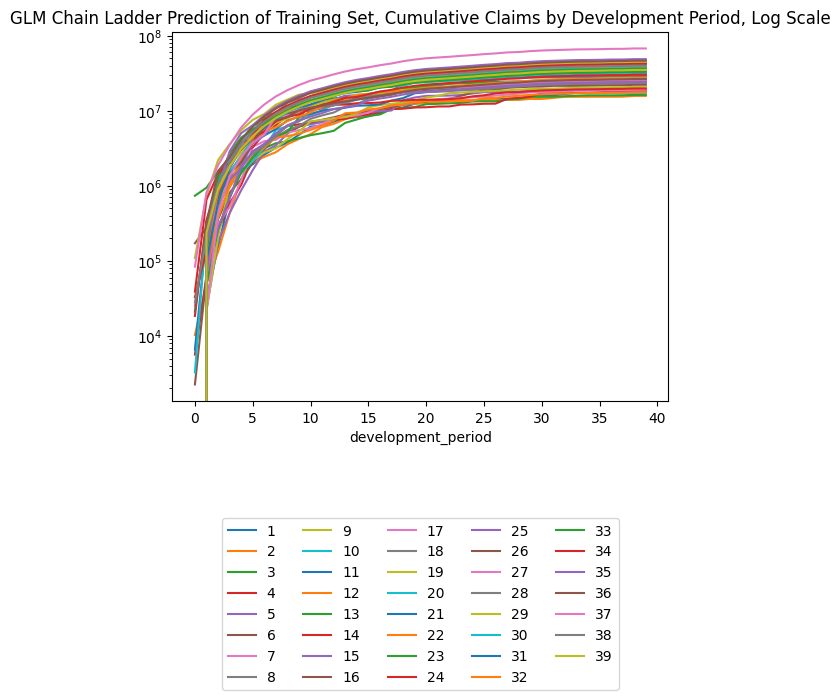

Chain ladders can be replicated with a GLM as discussed in our earlier article. To do this use:

- Quasipoisson distribution was used in the article (here just Poisson)

- Log Link

- Incremental ~ 0 + accident_period_factor + development_period_factor. I.e. No intercept. One factor per accident and development period.

Here we will use Torch, a package for neural networks, initially to do a GLM. Idea being that if it works, one could then test deep learning components to it. * Poisson loss * Log Link * Incremental ~ accident_period_factor + development_period_factor, but with intercept (seems to converge better this way)

Doing this at the claim level should give similar results just divided by number of claims.

class LogLinkGLM(nn.Module):

# Define the parameters in __init__

def __init__(

self,

n_input, # number of inputs

n_output, # number of outputs

init_bias, # init mean value to speed up convergence

has_bias=True, # use bias (intercept) or not

**kwargs, # Ignored; Not used

):

super(LogLinkGLM, self).__init__()

self.linear = torch.nn.Linear(n_input, n_output, has_bias) # Linear coefficients

nn.init.zeros_(self.linear.weight) # Initialise to zero

if has_bias:

self.linear.bias.data = torch.tensor(init_bias)

# The forward functions defines how you get y from X.

def forward(self, x):

return torch.exp(self.linear(x)) # log(Y) = XB -> Y = exp(XB)# Small dataset, so we can do a high number of iterations to converge to the mechanical chain ladder figures more precisely

GLM_CL_agg = Pipeline(

steps=[

("keep", ColumnKeeper(["occurrence_period", "development_period"])),

('one_hot', OneHotEncoder(sparse_output=False)), # OneHot to get one factor per

("model", TabularNetRegressor(LogLinkGLM, has_bias=True, max_iter=10000, max_lr=0.10))

]

)

GLM_CL_agg.fit(

triangle_train,

triangle_train.loc[:, ["payment_size"]]

)Train RMSE: 685304.6875 Train Loss: -9891084.0

Train RMSE: 484032.34375 Train Loss: -10054772.0

Train RMSE: 484031.5625 Train Loss: -10054813.0

Train RMSE: 484031.4375 Train Loss: -10054817.0

Train RMSE: 484031.34375 Train Loss: -10054817.0

Train RMSE: 484031.375 Train Loss: -10054817.0

Train RMSE: 484031.40625 Train Loss: -10054817.0

Train RMSE: 484031.375 Train Loss: -10054818.0

Train RMSE: 484031.375 Train Loss: -10054817.0

Train RMSE: 484031.375 Train Loss: -10054818.0Pipeline(steps=[('keep',

ColumnKeeper(cols=['occurrence_period',

'development_period'])),

('one_hot', OneHotEncoder(sparse_output=False)),

('model',

TabularNetRegressor(max_iter=10000, max_lr=0.1,

module=<class '__main__.LogLinkGLM'>))])In a Jupyter environment, please rerun this cell to show the HTML representation or trust the notebook. On GitHub, the HTML representation is unable to render, please try loading this page with nbviewer.org.

Pipeline(steps=[('keep',

ColumnKeeper(cols=['occurrence_period',

'development_period'])),

('one_hot', OneHotEncoder(sparse_output=False)),

('model',

TabularNetRegressor(max_iter=10000, max_lr=0.1,

module=<class '__main__.LogLinkGLM'>))])ColumnKeeper(cols=['occurrence_period', 'development_period'])

OneHotEncoder(sparse_output=False)

TabularNetRegressor(max_iter=10000, max_lr=0.1,

module=<class '__main__.LogLinkGLM'>)triangle_train| occurrence_period | development_period | payment_period | payment_size_cumulative | payment_size | |

|---|---|---|---|---|---|

| 0 | 1 | 0 | 1 | 0.00 | 0.00 |

| 1 | 1 | 1 | 2 | 31,551.25 | 31,551.25 |

| 2 | 1 | 2 | 3 | 152,793.12 | 121,241.88 |

| 3 | 1 | 3 | 4 | 680,415.06 | 527,621.94 |

| 4 | 1 | 4 | 5 | 1,650,518.75 | 970,103.75 |

| ... | ... | ... | ... | ... | ... |

| 1481 | 38 | 1 | 39 | 216,292.89 | 188,254.41 |

| 1482 | 38 | 2 | 40 | 1,041,738.56 | 825,445.62 |

| 1520 | 39 | 0 | 39 | 0.00 | 0.00 |

| 1521 | 39 | 1 | 40 | 237,799.91 | 237,799.91 |

| 1560 | 40 | 0 | 40 | 0.00 | 0.00 |

820 rows × 5 columns

triangle_glm_agg = pd.concat(

[

triangle_train,

triangle_test.assign(payment_size = GLM_CL_agg.predict(triangle_test))

],

axis="rows"

).sort_values(by=["occurrence_period", "development_period"])

triangle_glm_agg["payment_size_cumulative"] = (

triangle_glm_agg[["occurrence_period", "payment_size"]].groupby('occurrence_period').cumsum()

)

triangle_glm_agg.pivot(index = "occurrence_period", columns = "development_period", values = "payment_size_cumulative")| development_period | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | ... | 30 | 31 | 32 | 33 | 34 | 35 | 36 | 37 | 38 | 39 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| occurrence_period | |||||||||||||||||||||

| 1 | 0.00 | 31,551.25 | 152,793.14 | 680,415.06 | 1,650,518.75 | 1,932,605.62 | 2,602,592.25 | 3,222,089.50 | 3,775,028.25 | 4,788,738.50 | ... | 15,739,832.00 | 16,082,119.00 | 16,780,244.00 | 17,400,658.00 | 17,422,826.00 | 17,422,826.00 | 17,485,892.00 | 17,485,892.00 | 17,485,892.00 | 17,485,892.00 |

| 2 | 10,286.75 | 49,493.38 | 132,020.67 | 430,670.75 | 1,225,081.75 | 2,044,801.25 | 2,433,025.00 | 2,808,325.75 | 3,586,153.00 | 4,218,801.50 | ... | 14,386,516.00 | 14,880,894.00 | 15,342,595.00 | 15,378,178.00 | 15,378,178.00 | 15,409,743.00 | 15,409,743.00 | 15,433,019.00 | 15,795,103.00 | 15,795,134.00 |

| 3 | 0.00 | 62,389.38 | 640,980.81 | 987,639.94 | 1,536,782.25 | 2,385,404.00 | 3,177,442.25 | 3,698,036.50 | 3,918,063.50 | 4,333,062.00 | ... | 15,336,449.00 | 15,437,039.00 | 15,646,281.00 | 15,867,258.00 | 16,067,564.00 | 16,067,564.00 | 16,071,147.00 | 16,108,434.00 | 16,285,614.00 | 16,285,647.00 |

| 4 | 5,646.32 | 63,203.44 | 245,984.02 | 584,083.56 | 1,030,191.25 | 2,371,411.75 | 3,179,435.75 | 4,964,554.00 | 6,244,163.00 | 6,612,675.00 | ... | 17,058,208.00 | 17,107,564.00 | 17,202,888.00 | 17,256,650.00 | 17,256,650.00 | 17,598,124.00 | 18,155,534.00 | 18,177,990.00 | 18,377,934.00 | 18,377,972.00 |

| 5 | 0.00 | 22,911.55 | 177,779.52 | 431,647.50 | 872,806.00 | 1,619,887.12 | 2,862,273.00 | 3,462,464.75 | 4,956,769.00 | 6,237,362.50 | ... | 19,066,432.00 | 19,121,176.00 | 19,157,450.00 | 19,222,536.00 | 19,222,536.00 | 19,235,524.00 | 19,416,042.00 | 19,440,056.00 | 19,653,882.00 | 19,653,922.00 |

| 6 | 2,259.24 | 62,889.90 | 357,107.84 | 813,191.12 | 1,218,815.75 | 2,030,866.75 | 3,065,646.00 | 4,652,615.50 | 5,097,719.50 | 6,097,211.00 | ... | 21,011,932.00 | 21,511,248.00 | 21,511,248.00 | 21,511,248.00 | 21,611,440.00 | 21,709,188.00 | 21,912,920.00 | 21,940,022.00 | 22,181,346.00 | 22,181,390.00 |

| 7 | 25,200.05 | 212,996.06 | 303,872.44 | 522,915.66 | 1,180,820.75 | 3,314,907.25 | 3,931,794.50 | 4,110,920.00 | 5,112,089.00 | 5,522,927.00 | ... | 17,723,798.00 | 18,120,490.00 | 18,120,490.00 | 18,526,526.00 | 18,582,586.00 | 18,666,634.00 | 18,841,812.00 | 18,865,116.00 | 19,072,620.00 | 19,072,656.00 |

| 8 | 0.00 | 101,324.27 | 482,201.94 | 1,302,672.88 | 1,762,479.38 | 2,816,009.75 | 3,599,199.00 | 4,404,539.50 | 5,549,806.50 | 6,296,549.50 | ... | 27,771,816.00 | 27,771,816.00 | 27,839,500.00 | 28,154,842.00 | 28,240,034.00 | 28,367,762.00 | 28,633,984.00 | 28,669,398.00 | 28,984,740.00 | 28,984,798.00 |

| 9 | 0.00 | 21,563.11 | 207,902.11 | 1,019,339.94 | 1,467,485.12 | 2,183,192.50 | 2,792,763.75 | 3,155,922.75 | 4,188,714.25 | 5,294,733.50 | ... | 19,726,482.00 | 19,798,286.00 | 20,005,244.00 | 20,231,846.00 | 20,293,066.00 | 20,384,850.00 | 20,576,154.00 | 20,601,604.00 | 20,828,206.00 | 20,828,248.00 |

| 10 | 0.00 | 62,438.00 | 642,477.19 | 1,220,444.00 | 1,512,127.75 | 2,125,861.00 | 3,205,997.50 | 5,542,220.00 | 6,233,909.00 | 7,278,580.50 | ... | 23,628,224.00 | 23,911,102.00 | 24,161,056.00 | 24,434,730.00 | 24,508,666.00 | 24,619,518.00 | 24,850,564.00 | 24,881,298.00 | 25,154,974.00 | 25,155,024.00 |

| 11 | 6,573.56 | 233,421.70 | 858,658.44 | 1,487,226.75 | 2,326,138.75 | 4,001,959.50 | 4,689,719.00 | 5,703,576.50 | 7,421,133.00 | 8,338,611.50 | ... | 25,062,908.00 | 25,362,962.00 | 25,628,092.00 | 25,918,384.00 | 25,996,808.00 | 26,114,392.00 | 26,359,466.00 | 26,392,068.00 | 26,682,360.00 | 26,682,414.00 |

| 12 | 0.00 | 337,785.12 | 602,649.94 | 947,861.81 | 1,960,324.25 | 2,438,331.25 | 3,263,283.25 | 4,443,729.00 | 4,600,126.00 | 4,950,643.00 | ... | 16,612,003.00 | 16,810,882.00 | 16,986,614.00 | 17,179,024.00 | 17,231,004.00 | 17,308,940.00 | 17,471,378.00 | 17,492,986.00 | 17,685,396.00 | 17,685,432.00 |

| 13 | 0.00 | 48,432.94 | 257,028.44 | 713,263.12 | 1,489,202.25 | 2,399,702.50 | 3,479,596.50 | 4,570,094.00 | 5,228,066.00 | 8,120,785.50 | ... | 25,494,660.00 | 25,799,882.00 | 26,069,580.00 | 26,364,872.00 | 26,444,650.00 | 26,564,258.00 | 26,813,552.00 | 26,846,716.00 | 27,142,010.00 | 27,142,064.00 |

| 14 | 18,463.75 | 352,189.09 | 1,015,863.62 | 1,451,495.75 | 2,553,104.50 | 3,266,427.50 | 5,020,334.50 | 6,462,674.50 | 8,515,544.00 | 9,761,135.00 | ... | 18,626,318.00 | 18,849,314.00 | 19,046,354.00 | 19,262,094.00 | 19,320,378.00 | 19,407,764.00 | 19,589,898.00 | 19,614,128.00 | 19,829,868.00 | 19,829,908.00 |

| 15 | 0.00 | 64,434.53 | 272,821.25 | 689,155.88 | 1,832,749.12 | 3,660,129.75 | 4,680,136.50 | 7,358,847.00 | 9,028,388.00 | 10,283,630.00 | ... | 29,044,080.00 | 29,391,798.00 | 29,699,042.00 | 30,035,448.00 | 30,126,330.00 | 30,262,590.00 | 30,546,594.00 | 30,584,374.00 | 30,920,780.00 | 30,920,842.00 |

| 16 | 172,118.23 | 276,003.81 | 1,468,529.00 | 2,473,900.50 | 3,320,296.00 | 4,172,269.00 | 6,421,418.50 | 7,663,898.50 | 9,201,556.00 | 9,852,889.00 | ... | 22,027,450.00 | 22,291,162.00 | 22,524,182.00 | 22,779,316.00 | 22,848,242.00 | 22,951,584.00 | 23,166,976.00 | 23,195,630.00 | 23,450,764.00 | 23,450,812.00 |

| 17 | 0.00 | 115,323.28 | 533,563.88 | 1,628,512.75 | 3,782,490.00 | 5,496,876.00 | 7,221,503.00 | 8,019,986.00 | 8,644,119.00 | 9,252,501.00 | ... | 24,161,342.00 | 24,450,602.00 | 24,706,194.00 | 24,986,044.00 | 25,061,650.00 | 25,175,002.00 | 25,411,260.00 | 25,442,690.00 | 25,722,540.00 | 25,722,592.00 |

| 18 | 0.00 | 113,693.24 | 534,180.81 | 1,126,199.38 | 2,086,124.25 | 2,636,258.50 | 3,415,784.25 | 4,550,892.50 | 6,384,181.50 | 7,276,585.00 | ... | 22,122,974.00 | 22,387,832.00 | 22,621,862.00 | 22,878,102.00 | 22,947,328.00 | 23,051,118.00 | 23,267,444.00 | 23,296,222.00 | 23,552,462.00 | 23,552,510.00 |

| 19 | 0.00 | 65,176.80 | 593,353.38 | 1,377,092.75 | 2,679,311.00 | 4,561,606.50 | 5,190,826.50 | 6,896,303.00 | 7,889,414.50 | 10,040,150.00 | ... | 25,454,734.00 | 25,759,478.00 | 26,028,754.00 | 26,323,584.00 | 26,403,236.00 | 26,522,656.00 | 26,771,562.00 | 26,804,674.00 | 27,099,504.00 | 27,099,558.00 |

| 20 | 3,269.31 | 303,522.59 | 789,422.50 | 1,556,370.62 | 2,431,901.50 | 4,251,400.00 | 6,718,960.50 | 9,005,604.00 | 10,659,464.00 | 12,031,561.00 | ... | 30,440,730.00 | 30,805,166.00 | 31,127,186.00 | 31,479,768.00 | 31,575,020.00 | 31,717,834.00 | 32,015,494.00 | 32,055,090.00 | 32,407,674.00 | 32,407,738.00 |

| 21 | 0.00 | 99,668.09 | 1,164,366.00 | 1,829,524.50 | 3,262,251.75 | 4,136,418.00 | 5,829,693.00 | 7,624,765.00 | 9,753,254.00 | 11,093,190.00 | ... | 31,515,314.00 | 31,892,618.00 | 32,226,004.00 | 32,591,032.00 | 32,689,648.00 | 32,837,502.00 | 33,145,670.00 | 33,186,666.00 | 33,551,694.00 | 33,551,760.00 |

| 22 | 0.00 | 73,295.09 | 370,612.00 | 1,025,605.62 | 2,284,124.00 | 3,889,548.50 | 5,347,188.00 | 6,029,389.00 | 7,281,125.00 | 8,422,002.00 | ... | 25,589,258.00 | 25,895,614.00 | 26,166,310.00 | 26,462,700.00 | 26,542,772.00 | 26,662,824.00 | 26,913,044.00 | 26,946,332.00 | 27,242,722.00 | 27,242,776.00 |

| 23 | 738,959.81 | 944,850.44 | 1,558,499.75 | 2,354,515.50 | 4,080,220.25 | 6,339,416.00 | 7,833,012.00 | 9,289,169.00 | 11,375,192.00 | 12,184,491.00 | ... | 31,142,902.00 | 31,515,746.00 | 31,845,194.00 | 32,205,908.00 | 32,303,358.00 | 32,449,466.00 | 32,753,990.00 | 32,794,502.00 | 33,155,218.00 | 33,155,284.00 |

| 24 | 32,878.80 | 139,590.50 | 722,637.12 | 2,000,021.75 | 3,699,119.00 | 4,275,938.00 | 5,317,856.00 | 7,233,628.00 | 8,291,626.50 | 8,943,028.00 | ... | 28,343,994.00 | 28,683,330.00 | 28,983,168.00 | 29,311,464.00 | 29,400,156.00 | 29,533,132.00 | 29,810,290.00 | 29,847,160.00 | 30,175,456.00 | 30,175,516.00 |

| 25 | 0.00 | 67,300.14 | 794,740.44 | 1,203,488.25 | 1,704,533.00 | 2,902,497.50 | 3,623,180.75 | 4,985,555.50 | 6,397,038.50 | 7,434,320.50 | ... | 22,882,390.00 | 23,156,338.00 | 23,398,400.00 | 23,663,438.00 | 23,735,040.00 | 23,842,392.00 | 24,066,144.00 | 24,095,910.00 | 24,360,948.00 | 24,360,996.00 |

| 26 | 0.00 | 222,579.27 | 724,894.44 | 1,461,998.50 | 2,063,614.62 | 3,727,648.00 | 6,192,158.00 | 7,523,791.00 | 8,246,266.00 | 10,140,250.00 | ... | 24,910,632.00 | 25,208,862.00 | 25,472,382.00 | 25,760,910.00 | 25,838,858.00 | 25,955,728.00 | 26,199,312.00 | 26,231,716.00 | 26,520,246.00 | 26,520,298.00 |

| 27 | 0.00 | 21,265.95 | 431,409.00 | 1,352,109.50 | 2,414,385.00 | 3,756,162.50 | 4,874,574.00 | 8,397,044.00 | 10,588,448.00 | 11,796,448.00 | ... | 36,532,016.00 | 36,969,380.00 | 37,355,836.00 | 37,778,968.00 | 37,893,284.00 | 38,064,672.00 | 38,421,896.00 | 38,469,416.00 | 38,892,552.00 | 38,892,628.00 |

| 28 | 0.00 | 281,462.16 | 1,313,574.88 | 2,223,132.00 | 3,606,104.50 | 5,205,782.00 | 8,583,559.00 | 9,386,136.00 | 12,726,494.00 | 14,633,823.00 | ... | 42,857,548.00 | 43,370,640.00 | 43,824,008.00 | 44,320,408.00 | 44,454,516.00 | 44,655,584.00 | 45,074,660.00 | 45,130,408.00 | 45,626,808.00 | 45,626,900.00 |

| 29 | 109,492.41 | 749,180.50 | 2,196,492.25 | 3,589,296.50 | 5,510,728.00 | 7,582,901.50 | 9,060,910.00 | 12,036,241.00 | 14,089,931.00 | 16,412,483.00 | ... | 41,941,452.00 | 42,443,580.00 | 42,887,260.00 | 43,373,048.00 | 43,504,288.00 | 43,701,056.00 | 44,111,176.00 | 44,165,732.00 | 44,651,524.00 | 44,651,612.00 |

| 30 | 0.00 | 152,130.56 | 1,171,098.38 | 2,392,989.50 | 4,038,955.50 | 5,796,676.00 | 6,487,593.00 | 7,935,940.00 | 9,448,145.00 | 12,658,112.00 | ... | 35,222,176.00 | 35,643,860.00 | 36,016,460.00 | 36,424,424.00 | 36,534,636.00 | 36,699,884.00 | 37,044,296.00 | 37,090,116.00 | 37,498,076.00 | 37,498,152.00 |

| 31 | 0.00 | 102,149.25 | 587,694.50 | 1,739,223.50 | 3,054,168.25 | 4,391,554.00 | 6,488,115.50 | 8,532,481.00 | 9,589,075.00 | 13,596,397.00 | ... | 39,249,012.00 | 39,718,904.00 | 40,134,100.00 | 40,588,704.00 | 40,711,520.00 | 40,895,656.00 | 41,279,448.00 | 41,330,504.00 | 41,785,108.00 | 41,785,192.00 |

| 32 | 0.00 | 137,841.00 | 841,636.31 | 1,810,914.25 | 3,203,533.50 | 4,339,657.00 | 7,193,541.50 | 8,792,350.00 | 11,048,168.00 | 12,931,037.00 | ... | 37,328,300.00 | 37,775,196.00 | 38,170,076.00 | 38,602,432.00 | 38,719,236.00 | 38,894,364.00 | 39,259,372.00 | 39,307,928.00 | 39,740,288.00 | 39,740,368.00 |

| 33 | 0.00 | 134,954.59 | 1,254,893.38 | 2,674,034.25 | 4,403,876.00 | 5,591,899.50 | 6,810,711.50 | 8,387,086.00 | 10,152,019.00 | 11,882,161.00 | ... | 34,300,500.00 | 34,711,148.00 | 35,074,000.00 | 35,471,288.00 | 35,578,616.00 | 35,739,540.00 | 36,074,940.00 | 36,119,560.00 | 36,516,848.00 | 36,516,920.00 |

| 34 | 38,893.38 | 652,741.81 | 1,456,564.38 | 2,050,356.00 | 3,044,004.00 | 4,796,483.00 | 7,570,764.00 | 9,698,433.00 | 11,739,319.00 | 13,739,974.00 | ... | 39,663,492.00 | 40,138,348.00 | 40,557,928.00 | 41,017,336.00 | 41,141,448.00 | 41,327,528.00 | 41,715,372.00 | 41,766,968.00 | 42,226,372.00 | 42,226,456.00 |

| 35 | 0.00 | 167,482.09 | 926,322.88 | 2,872,798.50 | 5,040,902.00 | 6,421,425.50 | 8,743,438.00 | 11,200,672.00 | 13,557,681.00 | 15,868,229.00 | ... | 45,807,164.00 | 46,355,568.00 | 46,840,140.00 | 47,370,704.00 | 47,514,044.00 | 47,728,948.00 | 48,176,864.00 | 48,236,452.00 | 48,767,016.00 | 48,767,112.00 |

| 36 | 21,154.29 | 320,832.62 | 1,568,433.12 | 2,393,324.00 | 4,121,508.50 | 6,130,129.00 | 8,346,808.00 | 10,692,576.00 | 12,942,664.00 | 15,148,399.00 | ... | 43,729,208.00 | 44,252,736.00 | 44,715,328.00 | 45,221,824.00 | 45,358,660.00 | 45,563,816.00 | 45,991,416.00 | 46,048,296.00 | 46,554,796.00 | 46,554,888.00 |

| 37 | 83,818.48 | 819,248.56 | 1,881,916.25 | 3,489,761.75 | 5,992,953.00 | 8,913,622.00 | 12,136,824.00 | 15,547,728.00 | 18,819,508.00 | 22,026,796.00 | ... | 63,585,224.00 | 64,346,468.00 | 65,019,108.00 | 65,755,588.00 | 65,954,552.00 | 66,252,864.00 | 66,874,624.00 | 66,957,336.00 | 67,693,816.00 | 67,693,952.00 |

| 38 | 28,038.49 | 216,292.89 | 1,041,738.50 | 2,063,425.25 | 3,543,510.50 | 5,270,441.50 | 7,176,253.50 | 9,193,051.00 | 11,127,587.00 | 13,023,989.00 | ... | 37,596,636.00 | 38,046,744.00 | 38,444,464.00 | 38,879,928.00 | 38,997,576.00 | 39,173,960.00 | 39,541,592.00 | 39,590,500.00 | 40,025,964.00 | 40,026,044.00 |

| 39 | 0.00 | 237,799.91 | 910,953.75 | 1,804,373.88 | 3,098,643.00 | 4,608,768.00 | 6,275,317.00 | 8,038,917.00 | 9,730,584.00 | 11,388,904.00 | ... | 32,876,592.00 | 33,270,192.00 | 33,617,980.00 | 33,998,776.00 | 34,101,648.00 | 34,255,892.00 | 34,577,368.00 | 34,620,136.00 | 35,000,932.00 | 35,001,000.00 |

| 40 | 0.00 | 166.40 | 726.71 | 1,470.36 | 2,547.66 | 3,804.63 | 5,191.81 | 6,659.76 | 8,067.84 | 9,448.17 | ... | 27,333.73 | 27,661.35 | 27,950.83 | 28,267.79 | 28,353.42 | 28,481.80 | 28,749.39 | 28,784.99 | 29,101.95 | 29,102.01 |

40 rows × 40 columns

Check difference with chain ladder. These seem quite small, except for occurrence period 40 where our log link is unable to get the value to zero.

(triangle_glm_agg.pivot(index = "occurrence_period", columns = "development_period", values = "payment_size_cumulative") -

triangle_cl.pivot(index = "occurrence_period", columns = "development_period", values = "payment_size_cumulative"))| development_period | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | ... | 30 | 31 | 32 | 33 | 34 | 35 | 36 | 37 | 38 | 39 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| occurrence_period | |||||||||||||||||||||

| 1 | 0.00 | 0.00 | 0.02 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | ... | 0.00 | 0.00 | -2.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |

| 2 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.12 | 0.00 | 0.00 | 0.00 | 0.00 | ... | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 31.00 |

| 3 | 0.00 | 0.00 | 0.00 | -0.06 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | ... | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | -2.00 | 31.00 |

| 4 | 0.00 | 0.00 | -0.02 | -0.06 | -0.06 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | ... | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 2.00 | 0.00 | 38.00 |

| 5 | 0.00 | 0.00 | 0.00 | 0.00 | 0.06 | 0.12 | 0.00 | 0.00 | -0.50 | 0.00 | ... | -2.00 | -2.00 | 0.00 | -2.00 | -2.00 | 0.00 | -2.00 | -2.00 | -4.00 | 36.00 |

| 6 | 0.00 | -0.00 | 0.00 | 0.00 | 0.00 | -0.12 | 0.00 | 0.00 | 0.00 | 0.00 | ... | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 2.00 | -2.00 | -2.00 | -4.00 | 40.00 |

| 7 | 0.00 | 0.00 | 0.00 | 0.00 | -0.12 | 0.00 | 0.25 | 0.00 | 0.00 | 0.00 | ... | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | -4.00 | -4.00 | -4.00 | 32.00 |

| 8 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | ... | 0.00 | 0.00 | 0.00 | 0.00 | -2.00 | -2.00 | -4.00 | -4.00 | -6.00 | 52.00 |

| 9 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | ... | -2.00 | 0.00 | -2.00 | -2.00 | -2.00 | -2.00 | -4.00 | -2.00 | -4.00 | 38.00 |

| 10 | 0.00 | 0.00 | 0.00 | 0.12 | 0.00 | 0.25 | 0.25 | 0.00 | -0.50 | -0.50 | ... | 0.00 | 0.00 | 0.00 | -2.00 | -2.00 | 0.00 | -2.00 | -4.00 | -6.00 | 44.00 |

| 11 | 0.00 | 0.00 | 0.06 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | ... | 0.00 | 0.00 | 0.00 | -2.00 | -4.00 | -2.00 | -6.00 | -6.00 | -8.00 | 46.00 |

| 12 | 0.00 | 0.00 | 0.00 | 0.00 | -0.12 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | ... | -2.00 | -4.00 | -4.00 | -4.00 | -6.00 | -4.00 | -6.00 | -6.00 | -8.00 | 28.00 |

| 13 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | -0.25 | 0.00 | 0.00 | 0.00 | ... | -2.00 | -4.00 | -4.00 | -6.00 | -6.00 | -6.00 | -12.00 | -10.00 | -12.00 | 42.00 |

| 14 | 0.00 | 0.00 | 0.00 | 0.00 | 0.25 | 0.00 | 0.50 | 0.50 | 0.00 | 1.00 | ... | -2.00 | -2.00 | -2.00 | -2.00 | -2.00 | 0.00 | -4.00 | -2.00 | -4.00 | 36.00 |

| 15 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 1.00 | ... | 0.00 | 0.00 | -2.00 | -2.00 | -4.00 | -2.00 | -4.00 | -4.00 | -6.00 | 56.00 |